Tl;dr: An error is returned when an agent tries to make a link between two entries when that agent don’t have the dependency yet. The error is below. Is it possible to have a retry mechanism for create link when the dependency hash is not yet held but can be assumed to be held at some point in the future?

{type: "internal_error", date: "Source chain error: InvalidCommit error: The dependency AnyDhtHash(uhCEk...) is not held"

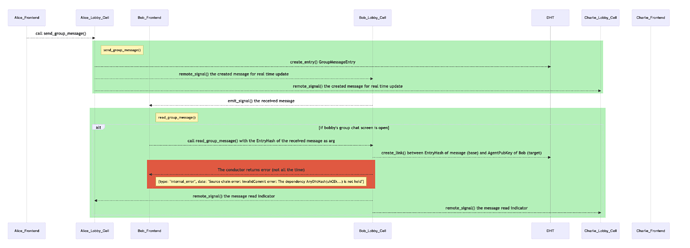

Hello! As we were testing Kizuna’s group messaging over the internet, we found some interesting bug that I thought would be nice to share here as well. And in the end of this post, there is a question/request that I think may be interesting to be pondered on.

Note:

- In our manual test over the internet, we used an AWS EC2 instance (ironic but will do for now

) for the proxy server but that is not shown in the diagram so as to not over complicate it.

) for the proxy server but that is not shown in the diagram so as to not over complicate it. - Charlie’s part is omitted since it is identical to what bob is doing here.

- Number of agent in the network: 3

each zome functions in the diagram are simplified here as well to focus on the matter at hand. However, if anyone wish to look deeper into the architecture of each zome call, you could PM me

-

send_group_message()→ sends a message (text/file/media/etc) to a group -

read_group_message()→ mark a message read by the caller by creating a link between the message and the AgentPubKey and let other members of the group know that you read the message too

Apologies in advance if the diagram is small! tried my best to make it larger  (please zoom in if it is too small)

(please zoom in if it is too small)

I can confirm that this error is coming from the zome function read_group_message() and the only API that may seem to return this error is the create_link() . And I also confirmed that the dependency that is missing is the EntryHash of the group message which is used as base.

I am not 100% certain as to why this is happening, but my hunch is that since DHT is eventually consistent, when bob tried to make a link between the message EntryHash and the AgentPubKey, he could not find the EntryHash as it was still in the process of getting published to the DHT (To be more precise, Alice is telling bob and charlie to hold the GroupMessage entry for her). However, Bob can already make a create_link() call between GroupMessage EntryHash and his AgentPubKey as he already received the hash of the GroupMessage through remote_signal() from Alice beforehand!

Even though this was a bug on our end, I am honestly really amazed at how distributed networks behave and it shows that various components of Holochain is really working!

Now, given that other projects in holochain may potentially do something similar, I have a few question/suggestion,

- What does the dependency not held really mean?

- Is it possible to have a retry mechanism for create link when the dependency hash is not yet held but can be assumed to be held at some point in the future?

ping @guillemcordoba @pauldaoust @thedavidmeister @Connoropolous