In this single-blob DHT, is it possible to ‘link’ files?

Sure, but it’d be pretty roll-your-own (at least until someone creates a generic library for this). You’d probably do the linking in some other DHT, where those blobs are represented by a ‘reference’. This is what it might look like in the ‘main’ DHT. The UI would know about the blob’s DNA hash, because it would be responsible for creating the blob DHT. It could then feed it into the create_blob_dht_ref zome function.

    // Entry content: the DNA hash of one single-blob DHT.
    // The derive list is the usual one for HDK entry structs; `.into()` below relies on it.
    #[derive(Serialize, Deserialize, Debug, DefaultJson, Clone)]
    struct BlobDhtRef(Address);

    #[zome]
    mod my_zome {

        #[entry_def]
        fn blob_dht_ref_entry_def() -> ValidatingEntryType {
            entry!(
                name: "blob_dht_ref",
                description: "A reference to a single-blob DHT",
                sharing: Sharing::Public,
                validation_package: || {
                    hdk::ValidationPackageDefinition::Entry
                },
                // Accepts every reference; a real app would check the hash here.
                validation: |_validation_data: hdk::EntryValidationData<BlobDhtRef>| {
                    Ok(())
                }
            )
        }

        // Commits a reference entry that wraps the blob DHT's DNA hash.
        fn create_blob_dht_ref(blob_dna_address: Address) -> ZomeApiResult<Address> {
            let blob_dht_ref_entry = Entry::App(
                "blob_dht_ref".into(),
                BlobDhtRef(blob_dna_address).into()
            );
            commit_entry(&blob_dht_ref_entry)
        }

        // Creates the reference entry, then links it from an existing base entry.
        #[zome_fn("hc_public")]
        fn link_to_blob(base_address: Address, blob_dna_address: Address) -> ZomeApiResult<Address> {
            let blob_dht_ref_address = create_blob_dht_ref(blob_dna_address)?;
            link_entries(&base_address, &blob_dht_ref_address, "link_to_blob", "")
        }
    }

@pauldaoust I am asking whether a file can be linked with other files within the same single-blob DHT, meaning inter-linking of files. Because the entries within this DHT are very much local to the agents, link entries would also be local, wouldn’t they? Or is there a way to link entries between two different blob-DHTs?

@premjeet ohhh, I see what you’re saying. The point of a single-blob DHT is that its purpose would be to store only one file — no other files to link to. Your filesystem would look like a bunch of separate DHTs, possibly united by one ‘file table’ DHT.

You could link entries between two different blob-DHTs, but right now it would be roll-your-own because there’s no built-in link type that points to another DHT. It would probably look something like (dna_hash, entry_address, link_type, link_tag). @pospi and @lucksus both have some interesting and useful reflections on this subject: Cross-DNA links
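To make that tuple a bit more concrete, here is a minimal sketch in plain Rust of what such a roll-your-own cross-DHT link could look like. Everything in it is hypothetical: `CrossDnaLink`, `encode` and `decode` are made-up names, not part of the HDK, and the `|`-delimited string format is just one assumed way to turn the link into entry content.

```rust
/// Hypothetical roll-your-own link that points into another DHT.
/// None of these names come from the HDK.
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct CrossDnaLink {
    /// Hash of the DNA (and therefore the DHT) the target lives in.
    pub dna_hash: String,
    /// Address of the target entry inside that DHT.
    pub entry_address: String,
    /// Coarse category of the link, e.g. "blob_to_blob".
    pub link_type: String,
    /// Free-form label for telling apart links of the same type.
    pub link_tag: String,
}

impl CrossDnaLink {
    /// Flatten the link into one string so it can be stored as entry content.
    /// Assumes the first three fields never contain '|'.
    pub fn encode(&self) -> String {
        format!(
            "{}|{}|{}|{}",
            self.dna_hash, self.entry_address, self.link_type, self.link_tag
        )
    }

    /// Parse a string produced by `encode`; returns None if a field is missing.
    pub fn decode(s: &str) -> Option<CrossDnaLink> {
        // splitn(4) keeps any further '|' characters inside the tag.
        let parts: Vec<&str> = s.splitn(4, '|').collect();
        if parts.len() != 4 {
            return None;
        }
        Some(CrossDnaLink {
            dna_hash: parts[0].to_string(),
            entry_address: parts[1].to_string(),
            link_type: parts[2].to_string(),
            link_tag: parts[3].to_string(),
        })
    }
}
```

The UI (or a bridging zome) would still have to resolve `dna_hash` to a running DHT on its own; the struct only records where the target lives.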

BTW, can you explain ‘link_type’ and ‘link_tag’, please?

@jakob.winter I just wanted to chime in to clarify for you (& everyone else) something that as a former privacy activist I think is quite important:

In a distributed system with untrusted nodes, you cannot reliably delete anything. Ever.

It matters precisely zilch that your system has some clever way of garbage collecting. Or encrypting. Or anything else. If you have sent data to someone, once it arrives at their machine then it is theirs. They might have hacked code. They might be directly accessing the Holochain storage backend. You have no way of knowing, unless perhaps you’re running signed, binary-compiled code on TPM hardware. Even then… that stuff has been hacked before, too.

It’s worth pointing out that this is the reality of today’s internet, except that currently it’s only shadowy figures and Orwellian government organisations who get to keep such information. All that systems like Holochain, Scuttlebutt and others do is level the playing field.

So, what’s coming for society at large is a very frank discussion about which data it is “polite” to access. Is it ok to read someone’s old profile data? Probably depends on the app, right? It’s a curly issue and a lot of people will probably get hurt before we figure it out. I’ve already hurt people in this way, to be frank. And I’m someone who thinks about this stuff a LOT.

True. As @artbrock says: One has to assume that at some point in the future cryptography might be cracked. So any data submitted to a public DHT should not be considered safe indefinitely.

That’s why it would be nice to have a freely configurable Dropbox-like hApp, where I could for example set up one “safe” directory for which I personally pick each node. So the DHT of that directory might only run on the HoloPorts of myself, my brother, my best friend and my lawyer.

Thus you reduce the attack surface from hackers (they’d really have to want to attack you or your friends/family specifically) while minimizing physical risks to your data (e.g. losing your data in a fire).

That way a Holochain hApp could make any risks perfectly visible and be honest about them, giving full agency back to the user.

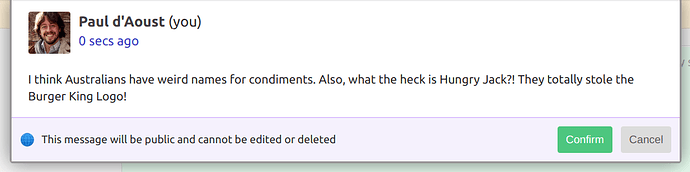

TOTALLY AGREE! This is a tough sell but ultimately necessary, I think. We want to equip people to be thoughtful about the consequences of their actions; we’re only warning them about things that centralised services are abstracting away under a user-friendly UI. I keep thinking about the weightiness of publishing in Secure Scuttlebutt’s Patchwork UI. Let’s say we’ve got some insensitive bozo who thinks he knows everything. In a flurry of blind rage, he rattles off some dumb post about antipodean food nomenclature:

That extra step, with the warning and the ‘confirm’ button, has caused me to stop so many times. Not just because I thought about whether my remarks were emotionally sensitive, private, or risky. As often as not, it’s been because I realised I wasn’t really contributing anything novel or helpful to the conversation! It’s a lovely zen practice.

I have read this thread, and it gets a little technical.

Is it possible for a DropBox or Cubix style hApp to be created and used on Holochain?

Does Holochain support IPFS?

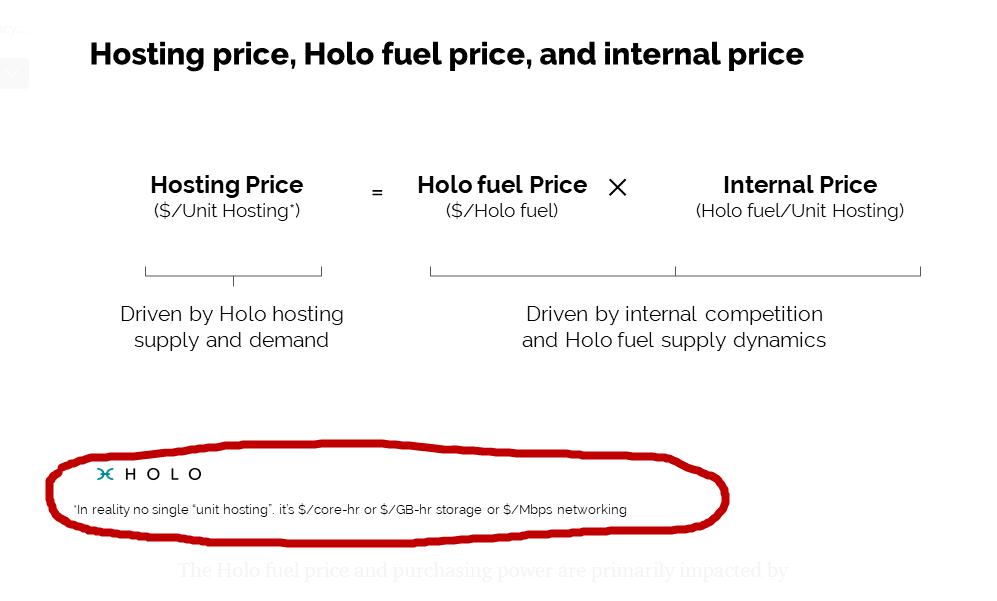

I noticed that in the pricing modelling for hosting there is a $/-GB per hour storage, is that what this would be related to?

Thanks.

A file storage hApp:

Yeah, it should be possible, especially if you use IPFS as the data storage layer. So you store all data via IPFS (where it can be deleted, if desired) and you handle all the organizational stuff (who is supposed to store which data) on the Holochain side.

As @pauldaoust suggested earlier:

IPFS would also bring another advantage: since it is a single address space (as opposed to BitTorrent, where each file has its own), IPFS has deduplication functionality, meaning identical content resolves to the same address and is stored only once.
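A toy illustration of why a single content-addressed space deduplicates for free: the address is derived from the bytes, so storing the same bytes twice lands on the same key. This is only a sketch in plain Rust, not IPFS code; `ContentStore` is a made-up name, and `DefaultHasher` stands in for the cryptographic hash a real system would use.

```rust
use std::collections::hash_map::DefaultHasher;
use std::collections::HashMap;
use std::hash::{Hash, Hasher};

/// Toy content-addressed store (hypothetical, not how IPFS is implemented).
#[derive(Default)]
pub struct ContentStore {
    blobs: HashMap<u64, Vec<u8>>,
}

impl ContentStore {
    /// Store `data` under an address derived from its bytes and return
    /// that address. Identical bytes always map to the same address.
    pub fn put(&mut self, data: &[u8]) -> u64 {
        // Stand-in for a cryptographic hash; fine for a demo only.
        let mut hasher = DefaultHasher::new();
        data.hash(&mut hasher);
        let address = hasher.finish();
        // Only the first copy is kept; later puts are no-ops.
        self.blobs.entry(address).or_insert_with(|| data.to_vec());
        address
    }

    /// Look up content by its address.
    pub fn get(&self, address: u64) -> Option<&[u8]> {
        self.blobs.get(&address).map(|v| v.as_slice())
    }

    /// Number of distinct blobs actually held.
    pub fn stored_blobs(&self) -> usize {
        self.blobs.len()
    }
}
```

Putting the same file in twice returns the same address and leaves `stored_blobs()` unchanged, which is the whole trick.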

Storage Negotiations:

Some thoughts about the issue of who’s required to store which bit:

- The author publishes the data and the initial validators are required to store it as well.

- A user can check the “keep this file” box for any data they voluntarily wanna keep. Like, I would check that box for all the music I regularly listen to (so I have a local copy and don’t have to re-download them all the time)

- If a user (even an initial validator) doesn’t want to store a file, they can uncheck the “keep this file” box, at which point they will see a little “…finding other custodian for this file…” message.

- The file will only be deleted from the system after A: the software has found that there are already more copies in circulation than required or B: the software has found another custodian.
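The last rule boils down to a small decision function. Here is a rough sketch of it in plain Rust; the names `ReleaseDecision` and `can_release` are made up for illustration, and I am assuming `copies` counts every known copy including the local one.

```rust
/// What the node does after the user unchecks "keep this file".
#[derive(Debug, PartialEq, Eq)]
pub enum ReleaseDecision {
    /// Safe to drop the local copy right away.
    DeleteNow,
    /// Keep the copy and show "finding other custodian".
    KeepSearching,
}

/// Hypothetical release rule.
/// `copies` is the number of known copies, including this node's own.
/// `required_copies` is the lower redundancy limit for the file.
/// `replacement_found` is true once another custodian has taken the file.
pub fn can_release(copies: u32, required_copies: u32, replacement_found: bool) -> ReleaseDecision {
    // Condition A: more copies exist than required, so dropping one
    // still leaves at least `required_copies`.
    let surplus = copies > required_copies;
    // Condition B: someone else has agreed to hold the file.
    if surplus || replacement_found {
        ReleaseDecision::DeleteNow
    } else {
        ReleaseDecision::KeepSearching
    }
}
```

How a node learns `copies` reliably in a network of untrusted peers is the hard part, and this sketch does not touch it.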

Making it attractive to store stuff:

But what incentivizes people to store files they aren’t interested in themselves?

One nice way would be a transferable reputation currency (hybrid). You earn points for storing files (with a higher reward for files close to the lower redundancy limit - the ones nobody else wants to store) and you earn points for providing that data (bandwidth).

You lose points for consuming content.
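Just to have something to poke at, here is a sketch of that bookkeeping in plain Rust. The reward formula is purely an assumption of mine (scale a base reward by required copies over existing copies, so scarce files pay more); `storage_reward` and `Account` are made-up names, and I let balances go negative since nothing above says they can't.

```rust
/// Hypothetical reward for storing one file for one accounting period.
/// Files with fewer copies than required pay more than `base_points`,
/// files with plenty of copies pay less. Integer division rounds down.
pub fn storage_reward(base_points: u64, copies: u32, required_copies: u32) -> u64 {
    // Treat "no known copies" like a single copy to avoid dividing by zero.
    let copies = copies.max(1) as u64;
    base_points * required_copies as u64 / copies
}

/// Hypothetical point balance for one agent.
#[derive(Debug, Default)]
pub struct Account {
    pub points: i64,
}

impl Account {
    /// Credit points for storing or serving data.
    pub fn earn(&mut self, points: u64) {
        self.points += points as i64;
    }

    /// Debit points for consuming content.
    pub fn consume(&mut self, points: u64) {
        self.points -= points as i64;
    }
}
```

With a base of 10 and a redundancy limit of 3, a file with a single copy pays 30 while a file with six copies pays 5.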

So at this point it’s just a standard currency. But here comes the reputation part:

Accounts with lots of points will get preferential treatment when requesting data. So if you have more points than I and we both request the same data, you’ll be served first, giving you a nicer experience, especially when streaming content.
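In code, "served first" could be as simple as ordering pending requests by balance. A minimal sketch in plain Rust, with made-up names (`Request`, `serving_order`) and the assumption that ties are broken by arrival order:

```rust
/// One pending request for a piece of data (hypothetical shape).
#[derive(Debug, Clone, PartialEq, Eq)]
pub struct Request {
    pub agent: String,
    /// The requester's current point balance.
    pub points: i64,
}

/// Order pending requests so that higher balances are served first.
/// `sort_by` is a stable sort, so requests with equal points keep
/// their arrival order.
pub fn serving_order(mut pending: Vec<Request>) -> Vec<Request> {
    pending.sort_by(|a, b| b.points.cmp(&a.points));
    pending
}
```

A real node would probably want some fairness floor so low-balance agents still get served eventually; this sketch ignores that.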

So if my streaming experience isn’t great, I have two options:

- I contribute more to the system (like running a HoloPort at home) to gain more points

- I buy some points from others on a regular basis (to balance my consumption)

I particularly like this idea, because it works for both tech-savvy people and users who just want to enjoy good bandwidth for a price.

Some open questions I still have:

- How do we prevent people from gaming the system by uploading shitloads of useless data, just to earn points?

- Where do the points come from? Are they just being generated by hosting data and burned by consuming data?

I still have to think heavily about this. I have a feeling there is a great solution. We just have to think of it.

Regarding deletion, what I imagine at least is that information about ownership of the data gets distributed, which would give the owner permission to mark the data as deletable. The deletion itself would then propagate lazily to reduce overhead. I am only just reading about current implementations of deletion, though.