related: How to run multiple agents and let them interact locally using `hc`?

I was trying to spin up multiple nodes on one computer to test networking during development. I was having issues with hc sandbox, so I wanted to use the holochain binary directly, and I ran into an issue that had me stuck for a long time. I'm writing it up to save my own time, and the time of others who try this.

The NOT WORKING configuration

If I want to run holochain via the holochain binary, then I run:

RUST_LOG=info holochain -c conductor-config.yml

Here conductor-config.yml is a file in the directory where I run the command, and it looks like this:

environment_path: databases
use_dangerous_test_keystore: false
signing_service_uri: ~
encryption_service_uri: ~
decryption_service_uri: ~
dpki: ~
keystore_path: ~
passphrase_service: ~
admin_interfaces:
  - driver:
      type: websocket
      port: 1234
network:
  bootstrap_service: https://bootstrap-staging.holo.host
  transport_pool:
    - type: proxy
      sub_transport:
        type: quic
      proxy_config:
        type: local_proxy_server
  network_type: quic_bootstrap

Next I install the Happ and activate an “app” websocket port on this conductor.

# Install the Happ

hc sandbox call --running=1234 install-app-bundle --app-id=main-app back/workdir/acorn.happ

# ACTIVATE THE WEBSOCKET PORT

hc sandbox call --running=1234 add-app-ws 8888

Now let’s say I have a 2-conductor-config.yml which will act as a configuration for a second node.

environment_path: databases2
use_dangerous_test_keystore: false
signing_service_uri: ~
encryption_service_uri: ~
decryption_service_uri: ~
dpki: ~
keystore_path: ~
passphrase_service: ~
admin_interfaces:
  - driver:
      type: websocket
      port: 1235
network:
  bootstrap_service: https://bootstrap-staging.holo.host
  transport_pool:
    - type: proxy
      sub_transport:
        type: quic
      proxy_config:
        type: local_proxy_server
  network_type: quic_bootstrap

Note that I changed environment_path to databases2 to keep the two conductors' on-disk data separate, and admin_interfaces.driver.port to 1235 so the admin websocket listens on a different port than the first. I thought this should be enough (but was wrong).

We would start this conductor with the command RUST_LOG=info holochain -c 2-conductor-config.yml

Then, as with the first, we would run these to install the Happ and activate an “app” websocket port on this conductor.

# Install the Happ

hc sandbox call --running=1235 install-app-bundle --app-id=main-app back/workdir/acorn.happ

# ACTIVATE THE WEBSOCKET PORT (8888 is already taken by the first conductor)

hc sandbox call --running=1235 add-app-ws 8889

Now we should have two identical DNAs running under different agents. They should be able to discover each other via the bootstrap service https://bootstrap-staging.holo.host, and because they’re on the same local network, each should be able to handle incoming connections and talk to the other.

When I tried this, and I tried multiple different network configurations, I always got this terrible result:

WARN gossip_loop: kitsune_p2p::spawn::actor::gossip: msg="gossip loop error" e=KitsuneError(KitsuneError(Other(OtherError("refusing outgoing connection to node with same cert"))))

Apr 26 18:14:33.136 WARN gossip_loop:process_next_gossip: kitsune_p2p_proxy::tx2: refusing outgoing connection to node with same cert

This was flooding through my logs every 25 milliseconds or so.

I couldn’t make sense of the error, and tried posting it to the Discord chat space I am in, but no one recognized the error.

After some hours spread over two days, I started to mentally process the issue, as one little thing started to stand out in the Rust logs…

INFO kitsune_p2p_transport_quic::tx2: bound local endpoint (quic) local_cert=Cert(gkQUat..FMJG1c) url=kitsune-quic://10.0.0.140:58280

The part that stood out was “local_cert=Cert(gkQUat..FMJG1c)”, which seemed related to the “node with same cert” message.

Then I realized that node 2 had a similar text:

INFO kitsune_p2p_transport_quic::tx2: bound local endpoint (quic) local_cert=Cert(gkQUat..FMJG1c) url=kitsune-quic://10.0.0.140:55921

In the flood of logs I had not noticed this at first, and when I did see it, I thought it was fine because the kitsune-quic addresses were different.

Now I realized the “local_cert=Cert(gkQUat..FMJG1c)” was the same.

I wondered how the two nodes were ending up using the same “local_cert”. Where was the “local_cert” coming from?

I realized that it must be the place where Holochain tends to store its secret values and most private information, the “lair” :p. There is really something called “lair”.

It turned out the two nodes were sharing the SAME “lair keystore”, and the “local_cert” that “kitsune” (the networking module) uses for encryption and so on was being pulled from that keystore.

In particular, the second holochain would start itself without spawning a new lair keystore, but instead satisfied itself with connecting to the currently running one, even though another holochain process already was connected to it. Gotcha!
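To spot this kind of collision without scrolling through the log flood, the cert can be pulled out of each conductor's log and compared. This is only a minimal sketch: it assumes you have saved each conductor's output to a file (node1.log and node2.log are hypothetical names) and that the "bound local endpoint" line has the format shown above. local_cert and certs_collide are helper names made up for this example.

```shell
# Print the first local_cert value found in a holochain log read on stdin.
# Assumes the "bound local endpoint ... local_cert=Cert(...)" line format shown above.
local_cert() {
  sed -n 's/.*bound local endpoint.*local_cert=Cert(\([^)]*\)).*/\1/p' | head -n 1
}

# Succeed if both log files report the same (non-empty) local_cert.
certs_collide() {
  a=$(local_cert < "$1")
  b=$(local_cert < "$2")
  [ -n "$a" ] && [ "$a" = "$b" ]
}

# Hypothetical usage, with log files you captured yourself:
#   certs_collide node1.log node2.log && echo "same cert: are both conductors sharing one lair keystore?"
```

If certs_collide succeeds, both conductors bound their endpoints with the same cert, which is the situation that produced the "refusing outgoing connection to node with same cert" warnings.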

The WORKING configuration

So, how did we fix it?

In conductor-config.yml, we changed:

from:

keystore_path: ~

to:

keystore_path: keystore

and in 2-conductor-config.yml, we changed:

from:

keystore_path: ~

to:

keystore_path: keystore2

Now each of the two conductors has its own keystore. The value is a path, and a folder is actually created at that path, such as “keystore” and “keystore2”.

This immediately solved the problem, after restarting the conductors, and networking was suddenly working without issue!
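A small sanity check before starting the conductors could catch a shared keystore up front. The sketch below only does a plain text match on the top-level keystore_path line (it is not a YAML parser), and config_value and distinct_keystores are hypothetical helper names, not part of any Holochain tooling.

```shell
# Print the value of a top-level "key: value" line in a conductor config.
# Plain text match only; handles simple one-line scalars, nothing nested.
config_value() {
  sed -n "s/^$1:[[:space:]]*//p" "$2" | head -n 1
}

# Succeed only if both configs set keystore_path, and to different values.
# "~" is YAML null, meaning "not set", which is the NOT WORKING case above.
distinct_keystores() {
  k1=$(config_value keystore_path "$1")
  k2=$(config_value keystore_path "$2")
  [ -n "$k1" ] && [ "$k1" != "~" ] &&
    [ -n "$k2" ] && [ "$k2" != "~" ] &&
    [ "$k1" != "$k2" ]
}

# Usage with the two files from this post:
#   distinct_keystores conductor-config.yml 2-conductor-config.yml \
#     || echo "keystore_path is unset or shared between the two configs"
```

The same config_value helper could be pointed at environment_path to confirm the two conductors are not sharing a database folder either.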

Lesson

When you start holochain, you SHOULD always see this message (which is confusing, because it says it's an error):

Apr 27 18:02:40.677 ERROR lair_keystore_client: error=IpcClientConnectError("/Users/x/code/rsm/acorn/keystore/socket", Os { code: 61, kind: ConnectionRefused, message: "Connection refused" }) file="/Users/x/.cargo/registry/src/github.com-1ecc6299db9ec823/lair_keystore_client-0.0.1-alpha.12/src/lib.rs" line=42

This is a GOOD thing, because it means that holochain WILL, after failing to connect to a “lair”, spawn/start one. And there SHOULD only be one lair keystore per holochain.
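One way to check this from a terminal is to count the running processes by name. This is a rough sketch that assumes the process names start with "holochain" and "lair" (adjust the patterns if your binaries are named differently). count_named and check_lair_per_holochain are hypothetical helpers written for this example.

```shell
# Count lines on stdin whose command name (path stripped) starts with the given prefix.
count_named() {
  sed 's:.*/::' | grep -c -- "^$1" || true
}

# Read a process listing on stdin, print both counts,
# and succeed only if there are as many lair processes as holochain processes.
check_lair_per_holochain() {
  listing=$(cat)
  h=$(printf '%s\n' "$listing" | count_named holochain)
  l=$(printf '%s\n' "$listing" | count_named lair)
  echo "holochain=$h lair=$l"
  [ "$h" -eq "$l" ]
}

# Usage: "ps -A -o comm" prints one command per line on macOS and Linux.
#   ps -A -o comm | check_lair_per_holochain || echo "conductors may be sharing a lair keystore"
```

Because the match is by name prefix, any unrelated process whose name also starts with "holochain" or "lair" would be counted too, so treat the result as a hint rather than proof.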

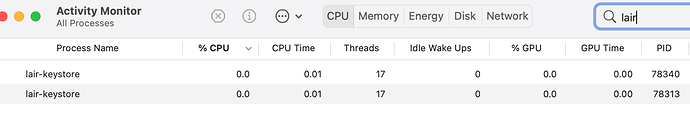

e.g. on Mac, if I open Activity Monitor and search for lair there should be the same number of lair processes as holochain processes.

If there are two holochain processes, then it should look like this:
