This post is still 404’ing for me. Can you guys open it up? Id love to take a look.

which post?

This one:

https://forum.holochain.org/t/how-to-structure-implement-a-sorted-linked-list-on-the-dht/4478/12

aaah, that is because it was a private message that i thought was a public forum thread haha

Hahha, np just grant me a cap token to view the thread

done. (would do all talks publicly now on… :-))

Sorting the data ad-hoc at the client is a terrible idea; it may work in the short term, but beyond that, for any real-world use-case, I’d highly recommend against it…

what is ‘the client’ where there are no servers?

someone needs to do the sorting, if you foist off heavy sorting ‘to the DHT’ then someone is going to be asking you to do the same at some point for them.

try to create some bounds on how much data you’re sorting at once by building granularity into large sets of data - https://docs.rs/hdk/0.0.100-alpha.1/hdk/hash_path/index.html

even better, try to include rough ordering into the granularity itself

The client in the sense that the UI consumes the DNA, so it is in essence a client to the DNA. Of course, there’re no client and servers, but what better name to give to the UI (PWA, SPA, electron app, a windows binary, or simply a CLI interface) than a client.

what is the main concern re: sorting in the UI vs. the wasm?

At https://docs.rs/hdk/0.0.100-alpha.1/hdk/hash_path/index.html,

- Holochain neighbourhoods (who needs to hold the data) center around the content address so the poor nodes closest to “chat-messages” will be forced to hold all messages (DHT hotspots)

Needs correction.

It should rather say,

“the poor nodes closest to “chat-messages” will be forced to hold the links to all messages”

They won’t be storing all messages, obviously! If that were the case, we’d be calling it a “fatspot”.

It is a hotspot in the sense that almost all users trying to read messages will have to do so through the “all-messages” entry, i.e., all users would, all day long, be calling the get-links on that one single entry (the “anchor” entry that simply says “all-messages”).

Correct me if I’m wrong though (in which case it would mean that I’ve been wrong all this time… :–(

[Indeed, one can argue that holding the links (i.e., the hashes plus some Holochain link-related data) to all messages is kinda like the same as holding all entries themselves, especially from a storage-perspective, and I agree… It indeed is a hell of a bulk load; but it’s not the same as holding all the entries themselves.]

Will be waiting the next noon… My technical doubts regarding your tree vs a linked-list approach is all that’s remaining to be sorted… Would love to finally have that clarified too.

Ideally the tree structure embeds some domain specific granularity

Examples of granularity include:

- Orders of magnitude

try to find a way to create buckets of granularity

(That way) we never need to fetch all messages because we can start as deeply down the tree as is appropriate

I get it. Makes perfect sense.

Imagine a clutter-twitter app, where people post tweets, and users should be able to see the latest tweets. It has no concept of Profiles or whatever. Everyone kinda posts in a universal feed.

Given a tree-based (“Path”-based, in HDK’s terminology) solution, users who want to read last hour’s posts can simply retrieve the entry for “1/3/2021: 23.00 to 24.00” and simply do a get-all-links on it and get all the messages posted in that hour (assuming the granularity of partitioning/pagination is 1 hour).

But the problem is that not all use-cases that the end-developers ((h)app developers) have exhibit that sort of a granularity; they simply don’t fit in that domain. Thus, the tree-style solution fails them (as it once failed me)…

Imagine that fresh Ford Model 1 cars are on sale, and that buyers would have to purchase it in exchange for US Dollars. And, for this specific use-case, imagine that no single manufacturer is churning out those cars; rather people who have a 3D-printer are printing those cars (at home) and offering it for sale at an arbitrary price that they think there hard-work is worth for; this transaction has to take place on a Holochain app (Yippee!!!).

Here’s the catch: the user has absolutely no idea what a Ford Model 1 should be worth.

One can see that a tree-based solution won’t be of any help here… For example, you may store “all-cars-for-dollars” entry, and link them to “all-cars-for-0-to-100-dollars”, “all-cars-for-100-to-200-dollars”, “all-cars-for-500-to-600-dollars” and so on… Further down, one may have other subtree-roots that say “all-cars-for-100-to-150-dollars” and so on…

Now what can you, as a rational user, query to this (h)app so as to strike the best deal? Nothing! You’re absolutely hopeless due to such an architecture…

You can make a guess and search for the subtree-root (i.e., an entry) that says “all-cars-for-0-to-100”, in which case you’d just be right on the money (meaning very lucky). But what if those 3D printers costed a billion dollars each? Well then would forever be searching in the wrong places! Plus doing a get-all-links to the rootest-root isn’t an option ('cause what would be the point then; I mean, that way we’d be back to the same problem we started with!)

Imagine another architecture of organizing such diverse prices on the DHT.

There’s an entry that says “best-deal”, to which is linked the Ford Model 1 that’s up for sale for the least amount of money. That car (or rather, the entry of that car) further links to the next best deal, and so on…

The user gets() the “best-deal” entry, gets its link (tagged “next”), and guess what? He’s found the cheapest Ford Model 1!

[Replace Ford Model 1 with, let’s say, Starbucks coffee-coupons, and US Dollars with Amazon-coupons and you’ve got the Vril’s token exchange system. Just so as a side-note…]

[Note that for this use-case, new sellers might have to be placed anywhere within the list… However, for the use case of messages, this is a great approach; in fact, I’d say even better than HDK::Path based approach. The “Latest-Message” entry would, upon the arrival (i.e., the posting) of a new message, would simply delete the previous link that it held, and rather create a new one that points to this newer message, while the new message would now (and forever from then on) hold the link of the last latest message (the previous latest message, in other words). This way, a user can read the latest message, the one posted before it, the one posted before that one, and so on… Trust me, nobody reads the messages posted on “Tuesday, July 21st 2019”; they simply scroll up and up until they reach what they had been looking for. And my experience with distributed databased has taught me to always “store data the way it would be retrieved”, i.e. time-sorted “latest-first” manner, in this example.

One doubt I have is whether deleting and creating (or simply updating, as updates are nothing but sequential deletes and creates) the link on the “latest-message” entry would be an efficient thing to do, especially from a storage perspective. Because there would always be some remnant leftovers (such as the link-headers, etc)… But, from a storage-optimization perspective, your Tree solution also ends up creating a great many “roots” and “sub-roots”, such as “all-cars-for-0-to-100-dollars” which do not hold any valuable information in and of themselves… Plus, you’ve already assured me that it’s best to ignore such silly storage-costs (How exactly does holochain support micro-payments?).

As for the hotspot thing, then yeah, I would mostly be hitting the “latest-messages-between-the-a-man-and-david” entry (substitute the string with a well-formatted chat-room entry that stores the hashes of the participants and not their names; you get the idea…) because that’s what I care about (i.e., I care about the latest messages). But note that it always has just one link. Plus it (or rather, the neighbourhood of that entry) would only be receiving get() requests from me and you; not that hot a spot. Even if the whole world were in that room, the same argument would apply to, let’s say, a famous person’s tweet/message when everyone wants to read it at the same time (i.e., would incur a huge traffic influx on that neighbourhood, so as to make their device’s wifi glow red hot).

Interesting points, althought I think the linked list approach has too many issues to be really viable.

So, from write costs: every action (create_link, create_entry, delete_entry…) creates a number of DhtOps (1 DHT OP = 1 thing that has to change in the DHT). Doing too many of these is bad not so much because of storage, but because of gossip and processing. You need to be gossiping at all times with your neighborhood to guarantee that all DHTOps stay online, and recruit new nodes if that doesn’t look likely. Those new nodes are going to need to validate it… Quite a bit of background processing. Here is how many DHTOps every action creates:

-

create_entry: 3 -

update_entry: 5 -

delete_entry: 3 -

create_link: 3

So yes, be careful when deleting things away. Also, they are not really deleted and cleaned away from the DHT, they are kept there for integrity and only “marked deleted”. So you actually gain no storage benefit.

Also, nodes or entries cannot really “react” to an DHTOp being validated and held in the DHT by themselves, it has to be one of the agents who actively takes and action after that happens. You’d have problems otherwise… Which of the 25 nodes holding the entry executes the action? How do validation rules work in that sense? What about partitions…?

About the querying of the linked list… How are they going to maintain order? Let’s say that the cheapest one is $50, and I have to commit one with $100: do I need to go over the whole list just to find the one I need to link from? What if just then the $50 one is deleted? What if two people commit two different $50 at the same time, or while being in different partitions?

While interesting and maybe useful in specific cases, in general I think this kind of approach does not really play well with the CRDT behaviour in holochain IMO, in which the data structure doesn’t depend on the “order of arrival” of events.

Another thing is that the UI may very well obscure the details of querying for paths. I think it’s been already 3 projects in which we are doing time indexing via trees of paths, and I think only one of them had explicit time range filter, the other were just infite-scrolling UX patterns like the ones you are describing.

Yeah, that so true… In fact, that’s true with even links; they leave ever-lasting waste too…

Sadly, yes… That’s why, especially for use-cases where new entries won’t have to be fit in the middle, it’s a good option. Though not so great for the ones you describe (where new data might have to be fitted within, and not at the ends)…

Exactly. That’s what I’m also saying; that there are specific use-cases for which Path is a bad option. [And somehow (rather unluckily) I run into them all the time… Haha!]

Oh, those are all just a bunch of hacks and fixes; not a cure of the disease itself!

yeah i think it needs to say ‘links to’

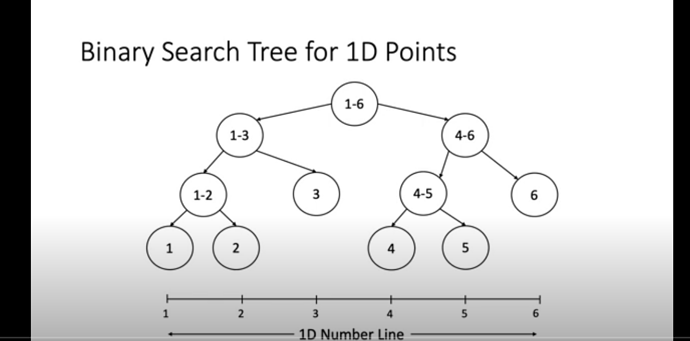

it’s a tree though with a well formed structure

0 -> all cars above MAX/2

-> all cars below MAX/2 -> all MAX/2 > cars > MAX/4

-> all MAX/4 > cars > 0 -> … MAX/16

etc.

so let’s say the path goes 4 hops down to MAX/16 per bucket ‘o’ price

as a user i want the lowest price, so i can start at MAX/16 > cars > 0

if there are zero results i can ‘zoom out’ to MAX/8 > cars > 0

if there are zero results i can ‘zoom out’ to MAX/4 > cars > 0

if there are results, i can then traverse the graph back down following the low size of each branch that is found

the logic is ‘zoom in and out’ not ‘order and enumerate buckets’

I guess something like this is what you’re talking about:

Sure indeed, at every hop we only have to process a fraction of what we had to in the previous hop; the problem space reduces to half in every step (since, in this example, we have a “binary” one). So, yeah, pretty neat zoom-out, zoom-in.

The catch is: we don’t know what the MAX is! And would never know! The market is so dynamic, after all.

Plus neither do we want to visit just about any arbitrary node; we’re only interested in the leftmost branch; so a tree would be an overkill.

you do know the max because you’re working with unsigned integers that have a maximum value

Yeah, in that sense, you’re right… That’s what I always seem to overlook!