Sounds thoroughly exciting, and also beyond my capacity to imagine. My question for you, who’s obviously been thinking about it for a long time, is: what’s the right level of modularity? I gather that you’re saying the usual level (“add this ecommerce plugin to your blog platform”) is the wrong level for enabling expressivity, but that there could be some modularity and re-use at the model level, and that’s what’ll make citizen coding explode? Is that right?


Ok, I get it, especially with what you explain after. However, is it still possible to build large-scale applications with Holochain, like a new, fully global social network?

The use case I would like to see happen is a new system of exchange, where people could connect to their local communities to exchange goods and services and bring in all the services they need for their daily lives: sharing tools, organizing events, education, etc. That includes social networking, but networking directly connected to people’s communities and physical needs. This would be a multi-level platform: the first level being the local community, then the nearby communities, and then all other communities, to allow for inter-community exchanges. The idea would be to reinvent the way we interact with each other, at every level and in a fully decentralized way, and to reorganize our societies to disengage from central governments or institutions.

Ok, makes sense; also that there could be data replication, with the most important / most used data potentially on all peers, and synchronized. Going to each peer for every single piece of data does not seem feasible for highly responsive apps, and you have the issue of peers being offline. Can I ask if this is the one place where the blockchain capability to ensure data integrity and durability is used? This is one thing that SOLID, for instance, does not have, which makes it impossible to do this data replication in a safe / durable way.

This feels just essential to me for making efficient, scalable solutions. All data is eventually relational, regardless of the storage mechanism (meaning: you don’t need a relational database to store relational data, and data does not have to be centralized). And indexes are, to me, critically essential. I am very curious to see how this is achieved, if there are resources on the topic.

Well, another difference I see is that with Holochain your data is on your computer; with Solid, it is in your pod, but the pod is hosted by a pod hosting provider, usually not on your computer… which makes it not so much fully decentralized, but rather gives users the choice of their provider (or to self-host).

For the app part though, from what I understand, I see Solid as being like Holochain, since in SOLID the apps are supposed to be pure front-end JavaScript apps, serverless, running on the end user’s machine. So when you “connect to a SOLID app”, it is in fact just a URL used to download the client app to your computer.

The biggest difference I see is that SOLID has no blockchain-like technology and thus cannot replicate data and ensure its security and durability. Does this make sense? It feels important to me to understand the differences between the technologies to fully grasp Holochain.

A classic example is a post with comments on a social networking application: if you create a post, the data is stored on your node, and if many users comment on your post, displaying your post with all its comments requires making N requests to N nodes.
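To make the cost concrete, here is a minimal simulation, assuming each comment lives on its author's node and every lookup is one network round trip. `PEERS`, `fetch_from_peer` and the latency values are hypothetical stand-ins, not a Holochain API. Issued one after another, the N lookups cost roughly the sum of the latencies; issued concurrently (the "can we parallelise those fetches?" idea raised later in the thread), they cost roughly the slowest single lookup.

```python
import asyncio
import time

# Hypothetical network: comment id -> (latency of its author's node in seconds, comment text).
PEERS = {
    "c1": (0.05, "Great post!"),
    "c2": (0.10, "I disagree, here's why..."),
    "c3": (0.08, "+1"),
}

async def fetch_from_peer(comment_id: str) -> str:
    """Simulate one network round trip to the node holding `comment_id`."""
    latency, text = PEERS[comment_id]
    await asyncio.sleep(latency)
    return text

async def load_sequential(ids: list[str]) -> list[str]:
    # N requests, one after another: total time is about the sum of latencies.
    return [await fetch_from_peer(i) for i in ids]

async def load_concurrent(ids: list[str]) -> list[str]:
    # N requests in flight at once: total time is about the slowest latency.
    # gather() returns results in the order the coroutines were passed.
    return list(await asyncio.gather(*(fetch_from_peer(i) for i in ids)))

async def main() -> None:
    ids = list(PEERS)
    for loader in (load_sequential, load_concurrent):
        start = time.perf_counter()
        comments = await loader(ids)
        elapsed = time.perf_counter() - start
        print(f"{loader.__name__}: {len(comments)} comments in {elapsed:.2f}s")

if __name__ == "__main__":
    asyncio.run(main())
```

Concurrency reduces waiting time but not the number of requests or the dependence on peers being online, which is why the thread also discusses link payloads, caching and indexing.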

To reassure you, it is also beyond my capacity to imagine.

But this is the beauty of it. It is an intuition I have, that we can create completely differently and that we will only fully realize what we can create once it is actually created, not before. The idea is to stay curious and open to what wants to emerge, be driven by this curiosity and excitement, and amplify it by working in groups. Working together will indeed bring the intelligence of the collective field and accelerate the emergence of what we are supposed to create to serve us all, because it comes from our deep interconnection rather than our mere individuality, provided we are creating with the right, pure and clear intention. And trust that the real creative impulse will come from the connection to the heart, to others and to our intuition, not from the mind, as we have been used to believing and were trained for. The mind is really important, but not for envisioning and creativity; this is the pitfall. The mind is a very powerful tool to physically create, once the creative impulse has been met through the heart. If we try to use the mind to envision, we will really limit ourselves and our potential, and it will give us big headaches. It is subtle to connect to the heart rather than the mind, especially for us tech guys! But there are practices to get there.

For me, the level of modularity I am using at the moment is to go down to the data model level, allowing users to actually design the world they want to create, and therefore all the concepts and the relations between the concepts of what they want to create. It is basically data modelling. On top of that, we also have all that is needed to model workflows and processes, security rules, user interfaces, etc., but with a high level of flexibility.
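As a rough illustration of modelling at the data-model level, here is a minimal sketch in which the concepts and the relations between them are themselves plain data that an end user could edit. The `Concept`, `Relation` and `Model` classes and the example model are hypothetical; this is not the Generative Objects metamodel.

```python
from dataclasses import dataclass, field

@dataclass
class Concept:
    name: str
    attributes: dict[str, str] = field(default_factory=dict)  # attribute -> type name

@dataclass
class Relation:
    name: str
    source: str  # concept on the "one" side
    target: str  # concept on the "many" side

@dataclass
class Model:
    concepts: dict[str, Concept] = field(default_factory=dict)
    relations: list[Relation] = field(default_factory=list)

    def add_concept(self, name: str, **attributes: str) -> None:
        self.concepts[name] = Concept(name, dict(attributes))

    def relate(self, name: str, source: str, target: str) -> None:
        # Reject relations that point at concepts the user has not defined.
        for concept in (source, target):
            if concept not in self.concepts:
                raise ValueError(f"unknown concept: {concept}")
        self.relations.append(Relation(name, source, target))

# A user-designed model for a local exchange community.
exchange = Model()
exchange.add_concept("Member", nickname="text", town="text")
exchange.add_concept("Offer", title="text", kind="text")
exchange.relate("offers", "Member", "Offer")
```

Because the model is data rather than code, workflows, security rules or user interfaces can be layered on top by reading the same structure.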

Reusable blocks that could still make sense are those that are definitely technical, like the authentication process, for instance. And then, rather than composing fairly closed modules, a modular approach can still be achieved: modules can be created with this model-driven platform and composed, while remaining very customizable.

But going further, which is what I am implementing at the moment in Generative Objects, means not even assuming that we need data modeling, but allowing end users to actually define how they see the world and how they want to model their applications, as maybe there are other ways to model an application than starting with, or even including, a data model.

In Generative Objects, this is done by making Generative Objects self-reflective: to build Generative Objects, we use Generative Objects (GO). So when I want to improve GO, I open the model of GO in GO, modify it and regenerate GO with GO. It took me a year to resolve the chicken-and-egg problem, but now it is done.

The end goal is to make the development platform as versatile as possible, so that the only limit to what can be done is the imagination of the user.

When I have finished this part, we will be able to use GO as a starting point to create a brand new GO, which can then be used to model and create applications in a very different way than the initial GO itself!

And to go even further, let’s plug an AI agent on top of it all.

I don’t know if this is fully clear; I am looking for ways to express it more clearly and with fewer words!

It’s certainly possible, and having lots of peers online at any given time would help to improve the resilience of DHT data. I do have concerns about query performance when data starts accumulating in large volumes, though, which is why I think that smaller sub-networks are probably good even in large global apps. No data to support my concerns, just a hunch.

I really like your idea of fractal or tree-like organisations of community. In my vision I’d like to see the most power in the local communities (subsidiarity), and people connect to larger spheres via stewards who belong to both the local and the regional sphere when they can’t source their needs locally (@matslats has been thinking about this for a long time too).

True, that is a concern. I think most of the time application developers will have to weigh the pros and cons of each. I would think that applications with a small number of participants (like my office’s team chat app) would probably want to dial the redundancy up pretty high. For networks with hundreds or thousands of participants, developers wouldn’t have to worry so much, if availability is the concern. Given a large enough pool of participants, the network should be able to maintain data availability/durability/redundancy automatically by enlisting more peers to hold data if the current peers are going offline all the time.
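A back-of-the-envelope way to reason about "dialling the redundancy up": if each of the r peers holding a piece of data is online independently with probability p, the data is reachable unless all r are offline at once, so availability is 1 − (1 − p)^r. Independence is a simplifying assumption (real peers' uptimes are correlated), and the numbers below are illustrative, not Holochain defaults.

```python
def availability(uptime: float, redundancy: int) -> float:
    """Chance that at least one of `redundancy` independent holders is online."""
    return 1.0 - (1.0 - uptime) ** redundancy

def min_redundancy(uptime: float, target: float) -> int:
    """Smallest number of holders whose combined availability reaches `target`."""
    if not 0 < uptime <= 1:
        raise ValueError("uptime must be in (0, 1]")
    if not 0 < target < 1:
        raise ValueError("target must be in (0, 1)")
    r = 1
    while availability(uptime, r) < target:
        r += 1
    return r
```

For example, if each laptop in a small team is online 30% of the time, `min_redundancy(0.3, 0.999)` returns 20: a ten-person team where everyone holds the data only reaches about 97%, while a network of thousands can recruit 20 holders easily.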

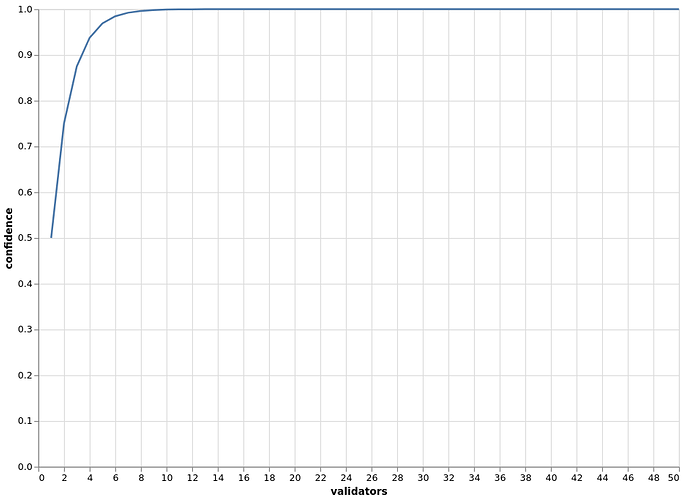

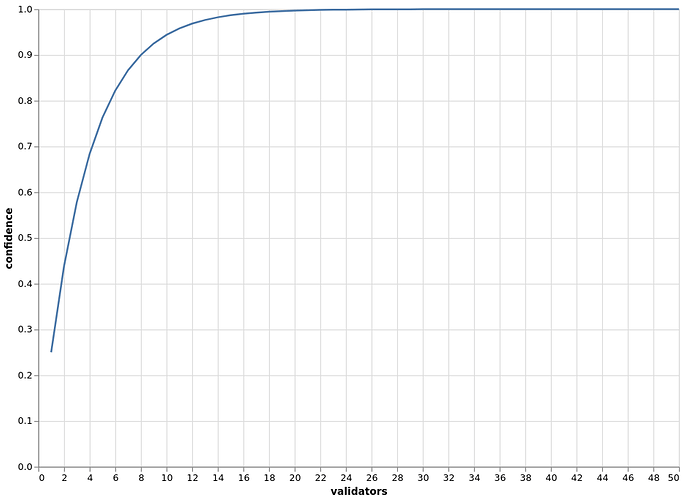

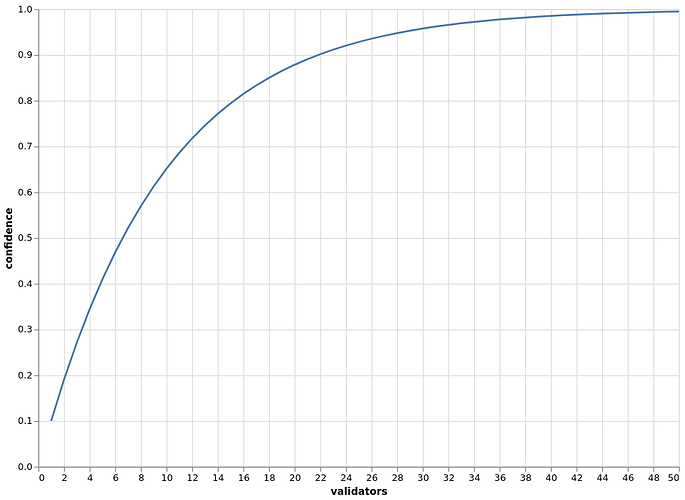

It depends on the meaning of safe – as mentioned, small networks won’t be very durable, but large networks should be. When we’re talking about safety/integrity in terms like “Alice hasn’t tried to rewrite her event journal to erase/falsify past actions”, Holochain takes an approach that’s very different from blockchain. The details are complex, but they can be reduced down to “with enough witnesses, you eventually converge on an approximation of the truth”. Here’s a sneak preview of an in-depth article I’m publishing next week.

Oh, thanks, I didn’t understand that – I thought the app could be any sort of client, whether your browser or some big server somewhere.

As far as I know, Solid’s redundancy/durability is entirely dependent on your pod provider, as you indicate. Holochain is ‘local first + peer backup + peer witnessing for bad actor detection’. So you do get the redundancy of a distributed system, but without the performance/cost issues of blockchain.

Okay, yes, and that’s a very good question, and it’s a question I have too. How expensive is it going to be to make these N requests to N nodes for N comments? Can we parallelise those fetches? (Answer: probably yes, and we might already be doing that.) Can we get clever with data structures? I can imagine that the structure of your graph database could help. For instance, links between nodes in the graph can have their own data associated with them. So I could say “get post QmAbHc8dhiel33 and all links of type post_comment attached to it”, (an entry and its links are stored on the same peer, so this is actually just one network request) and those links can have up to… I don’t know, something like 4kb, so that’d be enough for most comments, and then you could progressively load the remainder of comments that were too large, along with user icons.
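Here is a toy model of that idea, assuming links carry a small payload that is returned together with the base entry in a single lookup. `PeerShard`, `MAX_TAG_BYTES` and the method names are hypothetical; the 4096-byte figure just mirrors the rough "something like 4kb" guess above, not a confirmed Holochain limit, and this is not the HDK API.

```python
from dataclasses import dataclass, field
from typing import Optional

MAX_TAG_BYTES = 4096  # assumed limit, taken from the rough "something like 4kb" guess

@dataclass
class Link:
    link_type: str
    target: str               # address of the full comment entry
    preview: Optional[bytes]  # inline copy of the comment, or None if too large

@dataclass
class PeerShard:
    """What one peer holds: base entries plus every link attached to them."""
    entries: dict[str, str] = field(default_factory=dict)
    links: dict[str, list[Link]] = field(default_factory=dict)

    def add_comment_link(self, post: str, comment_addr: str, text: str) -> None:
        body = text.encode("utf-8")
        # Inline short comments in the link; oversized ones are loaded later.
        preview = body if len(body) <= MAX_TAG_BYTES else None
        self.links.setdefault(post, []).append(Link("post_comment", comment_addr, preview))

    def get_with_links(self, post: str, link_type: str) -> tuple[str, list[Link]]:
        # A single request: the entry and its links are held by the same peer.
        matching = [link for link in self.links.get(post, []) if link.link_type == link_type]
        return self.entries[post], matching

shard = PeerShard(entries={"QmAbHc8dhiel33": "My post"})
shard.add_comment_link("QmAbHc8dhiel33", "c1", "Nice!")
shard.add_comment_link("QmAbHc8dhiel33", "c2", "x" * 5000)

post, links = shard.get_with_links("QmAbHc8dhiel33", "post_comment")
ready = [link.preview.decode("utf-8") for link in links if link.preview is not None]
deferred = [link.target for link in links if link.preview is None]  # fetch progressively
```

The page can render `ready` immediately and fill in `deferred` comments (and user icons) as they arrive.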

Local caching (both at the Holochain level and the UI level) and peer-to-peer push messages (signals) could also be used to speed up the experience in various ways.

I like how you approach it from the heart. I think we’re scared of that nowadays, for whatever reasons – it’s too flaky, not grounded in fact, doesn’t pay the bills, etc – but I don’t know how we’re going to move forward if we don’t take a more heart-centred approach in pretty much everything we do. The head can tell us how to do stuff, but only the heart can tell us what should matter enough to bother doing. And like you say, the head isn’t great at opening up to the space of possibilities and jumping head first. I’d also say that it isn’t great at making connections between seemingly disparate subjects like the heart / right-brain is, and connection-making is going to be increasingly important as we tackle bigger and bigger challenges.

I think I understand you re: the right level of modularity – although I’d love to see an example of what it looks like to generate stuff without even an assumption that data modelling is where people would want to start! Sounds hard to believe but very interesting.

Yes, scared is the word. We are in a world driven by fear, and most probably for a reason! And not a very nice one… “it’s too flaky, not grounded in fact, doesn’t pay the bills, etc” are just thoughts, and thoughts are not reality, just a projection of the mind. This is how we have been conditioned, what we have been taught, and some people and organizations, the current system, have an interest in keeping us in these limiting beliefs.

However, my experience is that when you start to trust, go beyond the fears, and just listen, then magic happens, and there is no way back to the old.

And I am fully aligned with what you say. We are much more powerful than we think we are.

This is my current exploration. And again, another limiting thought (one I have but don’t believe anymore): this is too optimistic and crazy, and I cannot even fully fathom it, so how could I create it? However, I am so excited about this, and it feels so right, true and alive, that it is great fun to explore and create, and I can set myself up for success.

so great to hear; I want to follow this project and see where it goes. We need experiments of all shapes and sizes.

Hey, I forgot to mention one possible solution to the fast querying problem: elect special ‘indexing nodes’ who hold copies of everything in a way that lets them run fast queries (including parameters, ranges, etc) on the global data set. Creates some centralisation, but it could be the right solution for some needs.
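A minimal sketch of what such an indexing node might do, assuming it receives a copy of every post (for example by watching for new data) and keeps a sorted index so it can answer range queries without asking every peer. `IndexingNode` and its methods are hypothetical names; this only illustrates the trade-off described above.

```python
import bisect
from dataclasses import dataclass, field

@dataclass
class IndexingNode:
    """Holds a copy of every post's (timestamp, address) pair, kept sorted."""
    by_time: list[tuple[int, str]] = field(default_factory=list)

    def ingest(self, timestamp: int, address: str) -> None:
        # Called for every new post the node sees; insort keeps the list ordered.
        bisect.insort(self.by_time, (timestamp, address))

    def query_range(self, start: int, end: int) -> list[str]:
        """Addresses of posts with start <= timestamp < end, oldest first."""
        lo = bisect.bisect_left(self.by_time, (start, ""))
        hi = bisect.bisect_left(self.by_time, (end, ""))
        return [address for _, address in self.by_time[lo:hi]]

node = IndexingNode()
for ts, addr in [(100, "p1"), (250, "p2"), (180, "p3")]:
    node.ingest(ts, addr)
```

Each query is a binary search on one machine, which is exactly the speed-up and exactly the centralisation point: clients must trust that the node has ingested everything.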

not without Ceptr

not surprising they don’t have a whitepaper

exactly.

aka not fully distributed (although neither is HC in the beginning); however, HC is the only available framework for getting to that point, at least that I’ve seen. If anyone finds another, please lmk.

would love to get Phillip Beadle and Stephen Alexander in on this convo

and dare I say, Eric…

as the backend currently stands, can we fractally divide membranes? I want to build a hApp within a hApp within a DNA bundle

beautiful!

not via linear DAG I hope

lovely

great idea, this highlights the smart “agent” concept… think of them as decentralized autonomous oracles (reCeptrs ^^)

Oh, feels like a great idea. There definitely are ways and solutions for everything. The challenge is that we have to think outside the box, because we are so used to client-server development.

Oh, this one sentence makes me grasp more of HC, and get an essential point that I already knew but now truly get: the resilience, robustness and availability of the network grow with the number of peers! Thank you! I will read your article with attention.


And the fact that HC works on consensus among a subset of peers, and that we “eventually converge on an approximation of the truth”, makes HC unsuitable for holding a currency and financial transactions, doesn’t it? The focus of HC is to build dApps, not to be a new currency. So if we want to include financial exchanges in a hApp, would we then integrate with another blockchain for that?

Feels great

A Solid app does run in your internet browser; however, it is a serverless front-end app, so there is no connection to a server to access data, but a direct connection from the browser to the pods. Don’t hApps run in the browser?

Yes, I guess we have to be clever, and indexes are key, and so are double-sided relations.


For instance, for posts and comments:

On a classical database-backed application, your post has many comments: the foreign key (FK) is on the comment, and the post table has no knowledge of which comments are connected to a post. You get the post and its comments with a join query between the post table and the comment table. On a dApp, you have to store the link to the post on the comment data, but also the links to the comments on the post data, because otherwise, when you display your post, you would have to scan all the peers and ask them whether they have comments for your post… It is pretty boring and time-consuming work for a developer to properly manage all the relation keys in all directions. And yet these patterns are clear, and this is where low-code development and code generation can dramatically help! This is just an example; I don’t know if it fully applies to HC, where there is also common shared data. But on SOLID there is no shared data: the data is supposed to be stored on the author’s pod, which brings the challenge I am talking about. And with SOLID, storing keys to the comments on the post is, to me, already a violation of the SOLID principle that every user owns his/her data and can revoke access at any time. That is no longer fully true with these double-sided relations, because the knowledge that user X commented on user Y’s post remains on user Y’s pod even after user X revokes access (the content is no longer known, but the fact that user X commented is, and that is also personal data).
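The two approaches can be contrasted in a few lines, assuming simple in-memory dictionaries in place of a database and a DHT. In the relational version the foreign key lives only on the comment and a join recovers the post's comments; in the distributed version every new comment also writes a back-reference on the post side. All names here are hypothetical.

```python
from collections import defaultdict

# Relational style: the foreign key lives only on the comment row.
posts = {"p1": {"author": "Y", "text": "Hello"}}
comments = [
    {"id": "c1", "post_id": "p1", "author": "X", "text": "Hi!"},
    {"id": "c2", "post_id": "p1", "author": "Z", "text": "Welcome"},
]

def post_with_comments_join(post_id: str) -> tuple[str, list[str]]:
    # Equivalent of: SELECT ... FROM post JOIN comment ON comment.post_id = post.id
    # Only possible because one server can scan the whole comment table.
    return posts[post_id]["text"], [c["id"] for c in comments if c["post_id"] == post_id]

# Distributed style: no single table to scan, so keep links in both directions.
class BidirectionalLinks:
    def __init__(self) -> None:
        self.forward = defaultdict(list)   # comment id -> post ids
        self.backward = defaultdict(list)  # post id -> comment ids

    def link(self, comment_id: str, post_id: str) -> None:
        # Both sides must be written together, or the relation drifts out of sync.
        self.forward[comment_id].append(post_id)
        self.backward[post_id].append(comment_id)

    def unlink(self, comment_id: str, post_id: str) -> None:
        self.forward[comment_id].remove(post_id)
        self.backward[post_id].remove(comment_id)

links = BidirectionalLinks()
for c in comments:
    links.link(c["id"], c["post_id"])
```

Note that `backward` is the data that would sit on the post author's side, which is why revoking the comment itself does not erase the trace that it existed.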

I bring up this example to shed light on the benefits of a low-code approach: we can figure out patterns to optimize performance, improve durability or whatever else we need, and then abstract them into code generation templates. These patterns can be complex; that is not an issue, because the code is then generated automatically as many times as needed. Without a low-code approach, however, complex patterns would not be viable, because it would be too time-consuming for a developer to implement them over and over for every single case.
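Here is what abstracting such a pattern into a generation template could look like: a minimal sketch, assuming a model that just lists one-to-many relations and using Python's standard `string.Template` as a stand-in for a real template engine. The model format and the generated `link_<child>_to_<parent>` functions are hypothetical, not Generative Objects' actual templates.

```python
from string import Template

# Hypothetical model: each entry is a one-to-many relation (parent, child).
MODEL = [("post", "comment"), ("event", "attendee")]

# The double-sided link pattern is written once, as a template...
LINK_TEMPLATE = Template('''\
def link_${child}_to_${parent}(store, ${child}_id, ${parent}_id):
    """Generated: write the relation on both sides."""
    store.setdefault(("${child}", ${child}_id), []).append(${parent}_id)
    store.setdefault(("${parent}", ${parent}_id), []).append(${child}_id)
''')

def generate(model):
    # ...and expanded for every relation in the model.
    return "\n".join(LINK_TEMPLATE.substitute(parent=p, child=c) for p, c in model)

source = generate(MODEL)
namespace = {}
exec(source, namespace)  # load the generated functions for this demo only
```

However intricate the template becomes, adding a relation to the model costs one line, which is the economic argument made above.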

Heh, here’s where the waters get muddy. In reality, a PoW blockchain can only make probabilistic guarantees too – you’re basing your confidence on the faith that someone with a lot of mining power isn’t going to come along and mine a heavier chain than the one that is currently ‘official’. As far as I understand, the only blockchains that can make deterministic guarantees are the permissioned ‘classical BFT’ blockchains that require a vetted list of validators who commit each block by gaining 2/3 supermajority approval. And if you absolutely need that kind of surety, then you might be better off with one of those blockchains (or building one on Holochain, which shouldn’t be impossible).
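For reference, the arithmetic behind the classical BFT "2/3 supermajority": with n validators such a protocol tolerates at most f = ⌊(n − 1)/3⌋ faulty ones, and a block commits once strictly more than two thirds vote for it. This is a minimal sketch of that arithmetic only, not any particular chain's commit rules.

```python
def max_faulty(n: int) -> int:
    """Largest number f of faulty validators tolerated, i.e. the largest f with n >= 3f + 1."""
    return (n - 1) // 3

def commit_threshold(n: int) -> int:
    """Smallest number of votes strictly greater than two thirds of n."""
    return 2 * n // 3 + 1

def commits(votes: int, n: int) -> bool:
    """True if `votes` out of `n` validators form a supermajority."""
    return votes >= commit_threshold(n)

# With n = 3f + 1 validators the threshold works out to 2f + 1.
for f in range(1, 4):
    n = 3 * f + 1
    assert max_faulty(n) == f and commit_threshold(n) == 2 * f + 1
```

The deterministic guarantee comes from the vetted validator list: any two quorums of 2f + 1 overlap in at least one honest validator.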

For most real-world applications, though, probabilistic should be just fine. You can see a few graphs in the linked article that show that, even if only 50% of network nodes are good guys, which is pretty terrible, you only have to contact 7 of your peers to be 99% sure of getting a ‘true’ answer about whether a past financial transaction was valid. That isn’t all that painful. Even if there are only 25% good guys, you still only need to contact 16 peers. That’s because the bad guys don’t have any control over who you choose to consult. Holochain is based on the idea that, when you have lots of computers witnessing data and freely talking to each other about what they see, it’s just too difficult to keep fraud a secret.

When only 10% of the nodes are honest, then you’ve got a problem… but it’s a problem of inefficiency, not breakage as with a 51% mining attack; you just have to do a lot more work to be 99% assured of finding an honest validator: you have to contact 43 nodes, to be precise.
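The reasoning can be checked in a few lines, assuming each consulted peer is honest independently with probability h (peers are picked at random from a large network, so the bad guys cannot steer the choice). The chance that all n consulted peers are bad is then (1 − h)^n, and we want the smallest n with (1 − h)^n ≤ 1 − confidence. This simplified model gives 7 peers at 50% honest, but 17 at 25% and 44 at 10%; with 16 and 43 peers it lands just below 99% (about 98.998% and 98.92%). The article's graphs may rest on slightly different assumptions, such as a finite network, or on rounding.

```python
def peers_needed(honest_ratio: float, confidence: float = 0.99) -> int:
    """Smallest n with P(at least one of n randomly chosen peers is honest) >= confidence.

    Assumes each consulted peer is honest independently with probability
    `honest_ratio`, i.e. attackers cannot influence which peers you ask.
    """
    if not 0 < honest_ratio <= 1:
        raise ValueError("honest_ratio must be in (0, 1]")
    if not 0 < confidence < 1:
        raise ValueError("confidence must be in (0, 1)")
    all_bad = 1.0  # probability that every peer consulted so far is dishonest
    n = 0
    while 1.0 - all_bad < confidence:
        n += 1
        all_bad *= 1.0 - honest_ratio
    return n

for h in (0.5, 0.25, 0.10):
    print(f"{h:.0%} honest -> contact {peers_needed(h)} peers")
```

The cost grows roughly like 1/h as honesty drops, which matches the point above: a dishonest majority makes verification slower, not impossible.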

You could go to a blockchain at this point, but I think it’s a lot cheaper just to prevent the proliferation of Sybil nodes. Small networks could do this via some sort of non-invasive proof-of-identity; larger networks with open membership could require each new node to submit a proof-of-work (say, 30 seconds difficulty) which would be mildly inconvenient for real people but really painful for Sybil generation.
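A minimal hashcash-style sketch of the joining proof-of-work, assuming the network asks each new node to find a nonce such that the SHA-256 hash of its node id and the nonce starts with a given number of zero bits. Solving takes about 2^bits attempts, while verifying takes a single hash. The function names and the difficulty are hypothetical; calibrating it to roughly 30 seconds on real hardware would be a separate measurement.

```python
import hashlib
from itertools import count

def leading_zero_bits(digest: bytes) -> int:
    """Number of zero bits at the start of `digest`."""
    bits = 0
    for byte in digest:
        if byte == 0:
            bits += 8
            continue
        bits += 8 - byte.bit_length()  # zeros before the first set bit in this byte
        break
    return bits

def _digest(node_id: str, nonce: int) -> bytes:
    return hashlib.sha256(f"{node_id}:{nonce}".encode()).digest()

def solve(node_id: str, difficulty_bits: int) -> int:
    """Brute-force a nonce: expected ~2**difficulty_bits hash attempts."""
    for nonce in count():
        if leading_zero_bits(_digest(node_id, nonce)) >= difficulty_bits:
            return nonce

def verify(node_id: str, nonce: int, difficulty_bits: int) -> bool:
    """Cheap check any peer can run before accepting the new node."""
    return leading_zero_bits(_digest(node_id, nonce)) >= difficulty_bits

nonce = solve("agent-123", difficulty_bits=12)
```

Each extra bit doubles the expected work, so the cost can be tuned to be a one-off nuisance for a real person but expensive for someone minting thousands of Sybil identities.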

@thedavidmeister goes into some other details that, in sum, make it really bloody hard to get away with fraud in a peer-witnessing network.

(Sorry if that was too much information; I’ve just been writing and thinking about it a lot lately and really enjoy geeking out on it.)


The front end of the hApp can, yes. Back-ends run in the Holochain runtime on the user’s machine; clients can be built however you like (I see a lot of people doing single-page serverless web apps).

Sounds good – code generation seems to be really valuable for advanced devs, too, who don’t want to have to write the same boilerplate over and over again and worry about whether they got it right. I admit that, in a graph database, having to manage your own referential integrity between relations could be a real pain.

Yeah, and that underscores a concern I have about data pods too. We talk about data sovereignty, but what does it really mean? There’s a lot of information that I can say is unambiguously “mine”, like the embarrassing and vulnerable entry I wrote in my journal last night, but most information I produce is actually “ours” – social graph data, financial transactions – even government-issued ID is reflective of a relationship. Not sure how Solid tackles that issue – both the ontological difficulty and the inefficiency. I haven’t looked into it deeply enough.

Once again, I’m loving your vision and your wisdom; thank you for showing up and bringing your gifts!

Wow, these are some amazing developments! HC is like web 4.0, if you ask me.


Can’t wait to have the opportunity to play around with some of these tools!

geek out all you want brother, makes me feel less awkward

and so for the sake of that theme, I’m just gonna come out and say it…

blockchain is obsolete

infeasible

and most importantly, terrible for the environment!

I’m tired of the tribalism and the pressure to ‘walk on egg shells’

Should society not be able to speak up about flaws in technology?

Why would a network that gets more difficult over time ever be considered a good idea??

I will say it for those who can’t: Bitcoin is worse than worthless. Should be worth negative $'s.

The cliche of “work smart not hard” exists for a reason…

I am manifesting the day when the world wakes up and stops getting scammed by Game A elite ponzi

I would be ok if we removed the word “chain” from the brand

guess it’s too late bc of Holo… maybe call it HoloWorld haha, play on words ^^

Even better, HoloGame! Or perhaps, SoloGame! Haha!

After all, it’s all just a game…

It is not too much! Thank you for taking the time to share all this; it is very valuable. I can feel Holochain more now, getting the spirit of it bit by bit! I believe that it is more than tech: it is a reflection of the state of our evolution as human beings and a mirror of the rising of our consciousness, through technology as a matter of fact. My intention is to connect to the subtle energy of it, so that I can be part of it and bring my best by being of service.


This brings me to a friction with current low-code technologies. The field keeps growing and there seems to be a huge market for it, and yet it is not fully flowing. In particular, hard-core developers resist fully embracing low code, at least the major platforms we see emerging. The reason, for me, is that most low-code platforms are rather black boxes, and developers like transparency: developers like to be allowed to open the box and understand the mechanics. And it had better be high-quality, amazing, beautiful mechanics, because developers like beauty!

More than this: as developers we like to create, we are passionate artists, and all we need are tools to accelerate our work and unleash our power. Current low-code platforms don’t allow for that. They are in fact limited in what can be created with them: mainly data-centric, client-server applications.

Also, the major low-code platforms we see emerging are serving the old: they emerged from the current system to serve the current system. This is why it was important to me to open-source Generative Objects, let go of my old customers and focus on bringing a new energy. Better said: to refocus on my core initial intention and energy, and bring Generative Objects forward with the intention of being of service in creating a new world.

I call Generative Objects (GO) a low-code platform, but it is more than that. It is what I call Generative Code, in the sense that GO offers developers a fully open platform where they can play with every single detail, and create their own application metamodels and generation templates to model and generate any family of applications on any target architecture and infrastructure. In fact, developers will use the core GO platform (which is a low-code platform for modeling and generating data-centric client-server applications) to create another GO platform: another, specific low-code platform that will be used to create another kind of application, for instance, in our case, serverless Holochain applications. And so on; there are no more limits.
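A toy illustration of that separation of concerns, assuming a metamodel that says what a model may contain, a model written against it, and interchangeable template sets per target architecture. Everything here (the metamodel keys, the two targets, their output) is hypothetical and far simpler than Generative Objects; the point is only that the same model can be generated for different targets by swapping templates.

```python
# Metamodel: which properties a model element is allowed to have.
METAMODEL = {"entity": {"name", "fields"}}

# Model: written by an end user against that metamodel.
MODEL = [{"name": "Offer", "fields": ["title", "owner"]}]

def validate(model: list[dict], metamodel: dict) -> None:
    for element in model:
        unknown = set(element) - metamodel["entity"]
        if unknown:
            raise ValueError(f"not allowed by the metamodel: {sorted(unknown)}")

# Template sets, one per target architecture (both deliberately tiny).
def sql_target(entity: dict) -> str:
    columns = ", ".join(f"{name} TEXT" for name in entity["fields"])
    return f"CREATE TABLE {entity['name'].lower()} ({columns});"

def rust_struct_target(entity: dict) -> str:
    # Plain Rust struct; any Holochain-specific attributes are deliberately left out.
    fields = " ".join(f"pub {name}: String," for name in entity["fields"])
    return f"pub struct {entity['name']} {{ {fields} }}"

def generate(model: list[dict], metamodel: dict, target) -> list[str]:
    validate(model, metamodel)
    return [target(entity) for entity in model]

print(generate(MODEL, METAMODEL, sql_target))
print(generate(MODEL, METAMODEL, rust_struct_target))
```

Changing the metamodel changes what end users can express; changing the template set changes what gets built, without touching the users' models.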

To summarize: developers use GO to model and create the right low-code platform for end users, who then use it to create a specific type of application. It unites developers and end users, everyone being in their highest excitement and joy, doing what they love with the most efficient tools.

This is actually possible because GO itself is self-reflective and is used to model and generate itself.

I believe that how we see data, sovereignty, mine and yours, etc. is still the old paradigm. We are in the transition phase of a big shift. I foresee a world where we are truly one and where transparency, honesty, fearlessness and true sharing are the standard, and many of the things we set up to protect ourselves will one day vanish.

Oh, thank you for welcoming me; your words mean a lot to me. I am so grateful for this unfolding.

Well, blockchain actually brought something precious, supporting a big shift in the way we envision the world, and made the decentralization of finance actually possible, which is dismantling a pretty big piece of the old! That’s priceless.

And yet this is just a step, a spark in the universe. I like your energy: we ought not to put anyone or anything on a pedestal as who or what will save us, but stay in awe of what was created and be curious about what is coming next, knowing that nothing is permanent and all will pass, which brings excitement to this highly creative process!

And I don’t really like hearing “yeah, it’s ok that blockchain uses so much power; anyway, the current financial institutions directly or indirectly use more”. Well, that might be true, but sorry… not good enough! We are much more powerful than that; we can move beyond this level of energy consumption.

Thank you @The-A-Man and @guillemcordoba for the pointers.

I have watched the CRISPR videos. I remember now @artbrock talking to me about CRISPR when we had a talk around Holochain, low code and Generative Objects.

From what I see, it is a low-code platform dedicated to HC apps, and as such it speaks the HC language and is fully tailored to and built for HC apps. And I don’t see (at least from the demos) the separation of concerns between application metamodel, generation templates and generated application, which makes sense since there was not necessarily a need for it.

Generative Objects (GO) is a generic model-driven platform that can be used to model and generate any kind of application, by giving full control over the application metamodel, code generation templates and generated code, and it can target any output language, so it can speak Rust and HC. As such, it makes it easy to build very high-level end-user modeling interfaces (the CRISPR interface still feels to me targeted at tech-aware people), and to enrich and improve the functionality that can be modeled and generated by improving or writing new application metamodels and code generation templates.

What I could do is prototype the integration of Holochain with Generative Objects by adding the relevant code generation templates, still using the existing GO application metamodel, with a few tweaks if needed. I could build on top of the code generated by CRISPR and extract code generation templates from it.

Before doing this, I would like confirmation that it is actually relevant and could serve the HC community. Then I might need some support in understanding some of the details of HC apps and architecture.

@philipbeadle what do you think about this? How do you feel about partnering in this direction? I would love for the GO platform to serve you in bringing CRISPR even further!

Don’t know about others, but it would definitely serve me. You’ve got one customer, dude!

Go for it!

Indeed! In fact, even I at times find CRISPR intimidating… [I’ve never worked with the web stack: HTML, CSS, JS, Vue, what have you… I hate scripting languages (and all those frameworks that in the end produce HTML, etc. scripts). Absolutely loathe them! But CRISPR (or Builder, whatever you call it) seems to be married to Vue & Vuetify. So it’s great that your G.O. isn’t (theoretically) locked to a certain U.I. framework; that’s the beauty of abstracting away the generation code (as you said), and it’s a good design pattern. So it’s sort of comforting that G.O., the way it has been architected, would at all times be future-ready (unlike CRISPR; citation needed)! The world is changing at breakneck speed. I can’t say about next year, but I’m pretty damn sure that by the next decade scripting languages won’t survive; Holochain will (thankfully, which is why we’re all here, right?); and as for user interfaces, I guess V.R./A.R./X.R. applications, built statically as binaries for the respective hardware, will take over all our interactions. Sharing scripting languages was great when the internet (as we know it) wasn’t a thing, but with 5G, StarLink (and the likes), etc., it’s ridiculous to think that we would still be sharing browser-baked scripts (like HTML/JS). Not only do they restrict the flexibility in what sort of output (web page, VR experience, or whatever) you can produce, they also cost more to transfer. I mean, there’s a limit beyond which sharing scripts becomes more costly than sharing pre-built binaries: if you want to build a web experience that sort of mimics (let alone rivals) the output that game engines generate, the code that produces that output would outgrow, by orders of magnitude, the binary size you’d have had if you had chosen the pre-compilation path (thanks to the build compression we can leverage when producing binaries)!
I’m not saying that you ought to be generating binaries for user interfaces; all I mean is that it’s a great decision to keep it separate and abstracted, so that this remains doable in the late, late future…]