The point is that, while I can agree with what you wrote, your answer still lacks a real stance, and all in 72 words. I’ll tell you why. While it’s true that both points of view exist in an equilibrium, the balance isn’t static. Throughout history, at least recent history, the balance has slowly shifted from a belief-based society toward a proof-based one. That shift has already spanned centuries and has been a rather bumpy road. Now we are experiencing another bump, so the question is: what is your expected or predicted outcome of it in terms of that balance?

I would say my stance is that I agree with both viewpoints, and because of that I do not really see a conflict between them. My real stance is that there is no real conflict: I can like that objective ledgers make the world more honest and fair, and also like subjective ledgers, without seeing a conflict between the two.

I won’t torment you anymore, as the lengths of your posts seem to have entered a periodic variation (62, 72, 63) and we risk an infinite loop. We could end up hitting the halting problem and proving that our reality isn’t Turing complete.


While I respect your aversion to providing any quantitative or qualitative assessment, I cannot agree that both points of view I sketched before have equal chances and merits to continue unaltered in the (near) future. Advances in artificial intelligence will force us to depend more and more on actual data and proofs, unless we equip our AIs with a crippling mechanism of faith/belief, which is close to nonsense.

I have no aversion to that. This thread was a reply to your statement that what you like in crypto is the absence of a need to trust, and that this paradoxically makes the world more honest and fair. I can agree with that viewpoint, and I can also agree with @Brooks’s viewpoint that “community-based trust” has value. I like both. Resilience is a relationship-based system and Pseudonym Pairs is more of a global consensus, so my stance is that I am for both. About advances in AI, I think the hype around AI taking over the world tends to be based on the neuron-transistor analogy, the idea that neurons are cellular transistors. It seems they are not, and that microtubules are the transistors of the brain.

Both initial statements, mine and @Brooks’s, were very general and mutually exclusive. I already mentioned an equilibrium with a varying balance. While you can like both, that is neither a quantitative nor a qualitative assessment. Therefore, you have an aversion. But let’s drop it. Good that your word count hopped to 127, so there is no risk of the halting problem.

As to advances in AI, it’s irrelevant what the actual computing architecture of our brains is. First-order logic remains unchanged. You might as well run an AI on an abacus; it would just take a helluva long time. The danger of AGIs becoming real isn’t immediate, but it is close. With a proper initial setup, they won’t have certain disadvantages specific to humans, like an affinity to believe or trust in the absence of proof.

I don’t consider your and @Brooks’s viewpoints mutually exclusive, so my stance is that I agree with both. I also don’t think brain architecture is irrelevant to AI, given that AI is defined by human intelligence. People take AI to extremes like “mind uploading”; all of that is based on the neuron-transistor analogy, which seems to be false, and tubulin in microtubules seems to be the transistor of the brain.

You can consider whatever you want, but when one approach postulates belief-based decision-making and the other postulates proof-based decision-making, they are mutually exclusive. Tertium non datur. You can’t have them together in the same application or use case. It’s similar to the debates between proponents of the scientific method and theologians: the two worlds don’t intersect. Of course, many people share an attitude similar to yours, because then everyone loves you: you express an all-embracing universal understanding, which is so socially correct that dopamine gets injected into your veins.

Regarding the brain issue, it still doesn’t matter. No matter what physical device you use, the logic remains the same.

I agree you cannot have the same use case or application on both global and local ledgers. I think they are distinct from one another, but not mutually exclusive; they are just two separate niches. I agree with your tweet that @Brooks replied to, that the absence of a need to trust (a person) makes the world more honest and fair. Since tribe-centric nature makes people instinctively want to cheer for “their” mob, I acknowledged an all-embracing universal understanding, as I think you also do. I got more interested in Holochain after you gave it credit on BitLattice/Bitcointalk, and I “solved” reallocation in Resilience after that (unproven, but that’s how I see it).

re: proofs, I think both BitLattice and Holochain-type ledgers have similar proof (blockchain and holochain even more so, but still similar); the difference is the number of people who acknowledge the proof. Both social tools require you to believe, to trust, that others will have faith in the protocol. The difference is that in one case you trust an individual and in the other a population. Really, an individual or a mob, except a very refined type of mob mediated via an exocortex.

re: brain issue, even if we assume that computation in the brain is the same as what humans have mastered so far, quantity is still a factor; otherwise you would have had a technological singularity on the abacus. Microtubules as transistors falsify the neuron-transistor analogy, and with it a lot of the hype around an imminent AI takeover.

Mutually exclusive simply means that the presence of one thing in an application prohibits the presence of another thing (or many others). You agreed to that in your first sentence. In the second, you say that the presented points of view differ but aren’t mutually exclusive, which basically means that they can potentially be used alongside each other. Sorry, don’t sell me a fallacy.

As to BL, as I said, I’m here to debate, not to advertise. But just to clarify: BL was designed with independence from human actors in mind. Therefore, the proof in BL doesn’t depend on the number of people; they only provide computing power. That’s the actual innovation, as the decision-making process is independent of humans. They only see its results.

As to the brain issue, I could have a technological singularity with an abacus; it would just take a lot of time. There is actually no neuron-transistor analogy except in some popular science descriptions. A neuron’s excitation hysteresis varies in shape and propagation speed and cannot be modeled with a single transistor. However, that still doesn’t matter, as what is important is the underlying logic, independent of the actual hardware. The hardware at our disposal is still very primitive, yet the major issue in simulating actual neurons (which, by the way, isn’t crucial for building AIs) is the number of interconnections, not the speed of the computing units.

Please provide proofs to substantiate your claims.

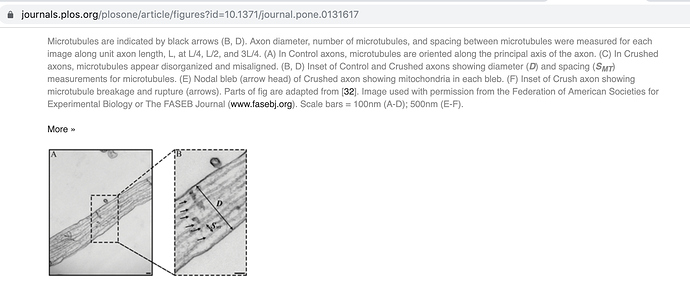

I think that global ledgers and local ledgers can be used alongside each other, yes. I do not think they are mutually exclusive, nor do I think your tweet said they were. Which state people choose to follow is a social choice. When people converge on a state, whether between two people as in Holochain-type ledgers or across the whole population as in BitLattice, that is based on faith in the protocol in both cases. For example, if there were two BitLattice networks running the same technology, which of them would people have faith in? Proof-of-vote is a tool for that: selecting which state to coordinate around.

re: brain issue, if it’s true that tubulin in microtubules act as transistors, there are billions of them in a single neuron; tubulin units are 4.5x8 nm in size. Neurons would then be more similar to integrated circuits. The idea that microtubules are computers goes back to the 1950s and has mostly been ignored. This article on CaMKII (whose loss leads to loss of long-term memory) is good; it suggests phosphorylation as the mechanism by which tubulin switches between states, which would make it trinary. https://journals.plos.org/ploscompbiol/article?id=10.1371/journal.pcbi.1002421

> I think that global ledgers and local ledgers can be used alongside each other, yes.

You now introduce a new set of things, local and global ledgers, and you agree that they can go alongside each other.

It’s a kind of abuse: I never suggested that a need to trust, or its absence, has anything to do with the scale of an application. So, again, you agree with something you made up, an inverted straw man.

In blockchains, people do not converge; the applications they run do. The difference is whether people are allowed to provide some decision-making input or not (via said apps). The proof-of-vote you propose enables such input in a direct manner, a manner I consider useless, as the issue of trusting a particular solution isn’t internal to the solution itself.

With respect to the brain issue: no matter how many transistors (or structures working analogously to them) there are, that doesn’t change a bit of logic or the theory of computation. It’s only a matter of scale.

But look, someone has already thought about compound processing units and designed the largest processor ever made (https://www.cerebras.net), exactly for neural network applications.

As to trinary processing, it may be more efficient: if each digit has more states, the same number of digits can store more information. In human-made applications we’ll possibly achieve it by using graphene nanoribbons. However, no matter what base is used, the underlying logic remains the same.
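A quick back-of-the-envelope check of the “more states, more information” point: one digit in base b carries log2(b) bits, so a trit carries about 1.585 bits. The sketch below only does that arithmetic; the function names are made up for illustration, and it says nothing about how tubulin or graphene hardware would actually encode states.

```python
import math


def bits_per_digit(base: int) -> float:
    """Information carried by one digit in the given base, measured in bits."""
    return math.log2(base)


def states(base: int, digits: int) -> int:
    """Number of distinct values that `digits` digits in `base` can represent."""
    return base ** digits


def digits_needed(base: int, values: int) -> int:
    """Smallest number of base-`base` digits that can distinguish `values` values."""
    n = 0
    while base ** n < values:
        n += 1
    return n


if __name__ == "__main__":
    for base in (2, 3, 4):
        print(
            f"base {base}: {bits_per_digit(base):.3f} bits per digit, "
            f"10 digits -> {states(base, 10)} values, "
            f"1,000,000 values -> {digits_needed(base, 1_000_000)} digits"
        )
```

For a million distinct values this gives 20 binary digits, 13 ternary digits and 10 base-4 digits: fewer, denser digits, but the same information and the same logic on top.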

No, I disagree with the trust/proof dichotomy. I agree that not having to trust a person, in Bitcoin, Ethereum or BitLattice, is good, and I also agree that relationship-based crypto, like what Holochain aspires to create, is good. @Brooks seemed to take an extreme position on relationship-based crypto; I do not, I like both. I also have never seen you take an extreme position; your tweet just said that you like one aspect of crypto, and I like that too.

Here is why I think the trust/proof dichotomy is false. Take two separate Bitcoin networks, or Ethereum networks, or BitLattice networks: each pair is based on the same mathematical proof. How people converge on just one network, out of two or more possible ones, is by trust, the “executive function” of the human brain. The Nakamoto consensus was a tool for choosing which evidence trail to follow, a form of stigmergy/swarm organization, and proof-of-vote (unproven) is an evolution of it, as I see it.
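The “tool for choosing which evidence trail to follow” can be illustrated with a heavily simplified version of Bitcoin’s fork-choice rule: among competing forks that pass the same validation rules, nodes follow the one with the most cumulative proof-of-work, not simply the one with the most blocks. In this sketch the per-block work values are hypothetical plain integers (real nodes derive work from each block’s target), and the “keep the first seen on a tie” behaviour is a simplification.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Chain:
    """A candidate chain, reduced to the work contributed by each of its blocks."""
    name: str
    block_work: List[int]  # simplified stand-in for work derived from each block's target

    def cumulative_work(self) -> int:
        return sum(self.block_work)


def choose_chain(candidates: List[Chain]) -> Chain:
    """Follow the candidate with the most cumulative work; on a tie, keep the first one seen."""
    best = candidates[0]
    for chain in candidates[1:]:
        if chain.cumulative_work() > best.cumulative_work():
            best = chain
    return best


if __name__ == "__main__":
    longer = Chain("longer", [10] * 5)    # 5 blocks, 50 units of work
    heavier = Chain("heavier", [30, 30])  # 2 blocks, 60 units of work
    print(choose_chain([longer, heavier]).name)  # heavier
```

Note that a rule like this only arbitrates between forks of one network that share the same rules; by itself it cannot pick between two separately launched networks, which is the kind of choice the post attributes to trust.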

re: brain issue, I don’t know whether the theory of computation that humans have invented can explain the brain (including microtubules), maybe because I lack expertise (I do), or because there is inherent uncertainty about that. It could very well be that microtubules as computers just increase the scale of computation. That is still relevant to estimates of an AI takeover, since AI is defined based on the human condition. Google and Singularity University have been preaching an imminent technological singularity, based on the neuron-transistor analogy promoted by people like Ray Kurzweil. I bought into the neuron-transistor analogy for two years, 2012-2014, but it seems to be false, falsified by the discovery of a new level of information processing in cells, including neurons: microtubules.

Just to clarify: I know your point of view. My point is that the way you express it lacks logical consistency. You cannot disagree with the trust/proof dichotomy (as long as you’d like to be logically correct), because trust is a belief dependent on premises of uncertain reliability, while a proof-based approach relies on premises whose reliability is known or can be assessed. I always present such an extreme position when my interlocutor skews logic; it has nothing to do with my views on the subject.

The trust/proof dichotomy isn’t false just because you can side with one of the trustless solutions, as the fact that you sided with it is external to them. These are two worlds that don’t intersect. BitLattice could be used for reputation scoring as well. That doesn’t matter, however, as the inner workings of BL are logically separate from what it is used for. One extreme example: Terry Davis once wrote a fully functional operating system, TempleOS. The guy was a religious fanatic, yet he managed to create a correct OS using logic and tools totally detached from his beliefs (his HolyC was just a variation of C, nothing more). Thus, while belief and hard code came together, they never actually intersected; that system is still just an OS that does what other OSes do.

So the two approaches I specified before are mutually exclusive, whether you like it or not. You can like them both, but that’s just liking.

As to the brain issue, the theory of computation explains computation (or rather, information theory should be mentioned here instead). It doesn’t matter what hardware is used to accomplish a computation. The base set of paradigms remains unchanged even if one uses a quantum computer that depends on statistical factors. So, you lack expertise.

The neuron-transistor analogy is a tool to roughly explain what is going on under our craniums. It’s only remotely related to what actually happens; it’s false in the same way as the sentence “Earth is a sphere” (while it’s a geoid). It’s like the popular physics explanation of virtual pairs, which is only remotely related to the observable state of the vacuum.

What I meant by “trust/proof dichotomy” is not that trust and proof are inseparable as concepts; I meant it in the context of your debate with @Brooks. I think the dichotomy that Holochain-type ledgers are based on trust, while Bitcoin, Ethereum or BitLattice-type ledgers are based on proof, is false. Both are evidence trails, i.e. proof, and both require people to trust them; it’s just that in one case it is relationship-based trust and in the other a social consensus, which is also trust. The innovation in Bitcoin was a proof to coordinate trust, the Nakamoto consensus, not the hash-linking or digital signatures (which are “trustless” and used in Holochain as well). The lattice structure in BitLattice seems to me like an evolution of hash-linking, and the lattice cryptography an evolution of digital signatures (both trustless), but it seems to me that there is a social coordination aspect in BL too. Proof-of-vote happens to be a broader consensus than proof-of-stake and proof-of-work, so I have been interested in the idea that it could integrate with BL in the future. I am unable to access proof of whether BL has social coordination mechanisms or not, whereas you can.
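To make the “hash-linking is trustless” point concrete, here is a minimal sketch of a hash-linked log using SHA-256 from Python’s standard library. It is not how Bitcoin, Holochain or BitLattice actually lay out their data; the block format, the JSON encoding and the all-zero genesis parent are assumptions chosen for illustration.

```python
import hashlib
import json
from typing import Any, Dict, List

GENESIS_PARENT = "0" * 64  # placeholder parent hash for the first block


def block_hash(prev_hash: str, payload: Dict[str, Any]) -> str:
    """SHA-256 over the previous block's hash and a canonical JSON encoding of the payload."""
    data = json.dumps({"prev": prev_hash, "payload": payload}, sort_keys=True).encode()
    return hashlib.sha256(data).hexdigest()


def build_chain(payloads: List[Dict[str, Any]]) -> List[Dict[str, Any]]:
    """Link payloads so that each block commits to the hash of the block before it."""
    chain, prev = [], GENESIS_PARENT
    for payload in payloads:
        h = block_hash(prev, payload)
        chain.append({"prev": prev, "payload": payload, "hash": h})
        prev = h
    return chain


def verify_chain(chain: List[Dict[str, Any]]) -> bool:
    """Recompute every link; an edited payload or a broken link makes this return False."""
    prev = GENESIS_PARENT
    for block in chain:
        if block["prev"] != prev or block_hash(prev, block["payload"]) != block["hash"]:
            return False
        prev = block["hash"]
    return True


if __name__ == "__main__":
    chain = build_chain([{"from": "A", "to": "B", "amount": 5},
                         {"from": "B", "to": "C", "amount": 2}])
    print(verify_chain(chain))           # True
    chain[0]["payload"]["amount"] = 500  # tamper with the first block
    print(verify_chain(chain))           # False
```

Anyone holding the data can run this check without trusting whoever handed it over. A forger, however, can also recompute every hash after an edit, so the check only protects you relative to a head hash you already accept; agreeing on that head is the separate consensus question discussed above. Digital signatures are left out because Python’s standard library has no public-key signature scheme.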

The reason I think the neuron-transistor analogy is false is that a lot points to microtubules inside neurons being computers, and to neurons being more like integrated circuits, with around 1 billion molecular switches each, similar to the processor in an iPhone. CaMKII has been studied since the 1980s; it has a perfect fit onto the tubulin lattice, and it is a kinase, an enzyme that adds phosphate groups. https://doi.org/10.1371/journal.pcbi.1002421

Interesting discussion. I am not convinced, though, that proof and trust are at opposite ends.

The more proof I have, the greater my trust will be. If a seller in an online marketplace has a high delivery rating, say 99.9%, my trust in them delivering is rather high, even though I might be the 1,000th person who doesn’t receive their product.

Trust is about probability. With every data point I can adjust my trust.
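One common way to formalize “with every data point I can adjust my trust” is a Beta-Bernoulli model: start from a prior over the seller’s delivery probability and update it after each transaction. This is my choice of model, not something the post specifies; the uniform prior is an assumption, and the model treats every rating as an honest, independent observation.

```python
from dataclasses import dataclass


@dataclass
class TrustEstimate:
    """Beta(alpha, beta) belief about the probability that a counterparty delivers."""
    alpha: float = 1.0  # prior pseudo-count of good outcomes (uniform prior, an assumption)
    beta: float = 1.0   # prior pseudo-count of bad outcomes

    def observe(self, delivered: bool) -> None:
        """Bayesian update after one transaction."""
        if delivered:
            self.alpha += 1
        else:
            self.beta += 1

    @property
    def mean(self) -> float:
        """Posterior mean probability that the next transaction is delivered."""
        return self.alpha / (self.alpha + self.beta)


if __name__ == "__main__":
    seller = TrustEstimate()
    print(round(seller.mean, 3))  # 0.5, no data yet
    for _ in range(999):
        seller.observe(True)
    seller.observe(False)
    print(round(seller.mean, 3))  # 0.998
```

With 999 deliveries and one failure the estimate ends up at 1000/1002, close to the 99.9% rating, while still leaving room for being the unlucky buyer.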

Also, I think the mechanism of trust-building works like this: transacting parties make themselves vulnerable to each other. When that vulnerability is not exploited by the other party, trust is built.

Do that many times and you establish a pattern, and therefore an expectation of positive behavior: you can trust each other.

Another important aspect is that when you misbehave, the penalty is much higher: trust is more easily lost than gained. So your reputation becomes a valuable asset that cost you a lot of time and resources to build.

I think trust doesn’t have to be based on pure faith; it can be based on quantifiable data.

It isn’t just about whom I dare to interact with. It’s also about making it extremely costly for someone to betray someone else’s trust, thereby positively influencing social behavior.

I fully agree with @Brooks that trust is a core factor in distributed community building.

One more important bit: trust cannot be transferred or given away like other currencies. But it can be staked. So it’s more like betting in a lottery.

In @Brooks’s example with the car purchase, when my friend (A) recommends her friend (B) as a trustworthy seller, she puts some of her reputation on the line. Should her friend (B) sell me a faulty car, not only will B get a negative reputation with me, my friend (A) will also lose some of my trust, since she made a bad referral.

Should the transaction be successful, though, not only will B gain my trust, but my trust in my friend (A) also increases, since she made a good referral.
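A toy model of the referral mechanism described above, which also reflects the earlier point that trust is lost faster than it is gained. All constants (GAIN, LOSS, REFERRER_SHARE and the neutral starting score) are hypothetical numbers invented for illustration. The ledger is kept per agent, since these scores are my own trust, not a transferable balance.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional

# All constants below are hypothetical, chosen only to illustrate the mechanism.
GAIN = 0.05           # trust gained after a good outcome
LOSS = 0.20           # trust lost after a bad outcome (lost faster than gained)
REFERRER_SHARE = 0.5  # fraction of the update applied to whoever vouched for the seller
NEUTRAL = 0.5         # starting score for someone I have no history with


def clamp(x: float) -> float:
    """Keep scores in [0, 1]."""
    return max(0.0, min(1.0, x))


@dataclass
class TrustLedger:
    """My own, agent-centric trust scores; they live with me and are not transferable."""
    scores: Dict[str, float] = field(default_factory=dict)

    def get(self, who: str) -> float:
        return self.scores.get(who, NEUTRAL)

    def settle(self, seller: str, referrer: Optional[str], good: bool) -> None:
        """Update the seller's score and, if someone vouched, the referrer's staked score."""
        delta = GAIN if good else -LOSS
        self.scores[seller] = clamp(self.get(seller) + delta)
        if referrer is not None:
            self.scores[referrer] = clamp(self.get(referrer) + REFERRER_SHARE * delta)


if __name__ == "__main__":
    me = TrustLedger({"A": 0.8})
    me.settle(seller="B", referrer="A", good=False)  # A recommended B, B sold a faulty car
    print({k: round(v, 2) for k, v in me.scores.items()})  # {'A': 0.7, 'B': 0.3}
```

A good sale would instead move B to 0.55 and A to 0.825: small gains, large losses, and the referrer shares in both.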

It’s all about risk management!

Of course, we could always just use escrow. But that would be far more costly and less efficient, both in time and resources.

[Edit] Trust is inherently agent-centric! It always stays with a person; it cannot be sold or transferred. That’s why Holochain is a perfect match for trust networks. [/EDIT]

Yes, trust and proof are separable as concepts. Also, blockchains and derivative technologies are separable from their applications. That’s an important point. Holochain, BL and other blockchains could work without any society using them. They are just tools and could be operated by bots. Social consensus is external to them. Our debate with @Brooks is about ideas, the separate concepts mentioned above.

As to the neuron-transistor analogy: in a generalized form, neurons behave like transistors. The analogy is only an approximation of their behavior; what lies beneath is ignored. And while more complex processing can be performed there (there are even proofs of complex analog signal processing, AFAIR), it’s still irrelevant when it comes to how that processing actually works on a logical level.

> I am not convinced though, that proof and trust are at opposing ends.

The issue in this debate is that we use words with different degrees of precision. Therefore, we understand the same sentences differently. Let me break down the meanings of the word “proof”. The dictionary definition refers mainly to the most rigorous case, but here we use “proof” in many meanings.

- “proof” understood as the dictionary states:

  - the cogency of evidence that compels acceptance by the mind of a truth or a fact;

  - the process or an instance of establishing the validity of a statement especially by derivation from other statements in accordance with principles of reasoning;

- “proof” understood as a formal proof derived from axioms, which includes provable machine consensus mechanisms;

- “proof” understood as a hint based on experience.

I use it mostly in the formal sense. That’s reasonable: we discuss algorithmic applications to real-world issues. To be sure about an output, the whole reasoning should be formally correct. However, I seem to be the only one using the strict version, which probably leads to misunderstandings.

The example you mentioned in your first post refers to hints, not proofs. You stated “The more proof I have, the greater my trust will be”. No, your trust becomes stronger only because of the number of hints that the other person behaves as you’d expect. These aren’t proofs in a strict sense, as they are impossible to formalize. Also, you don’t have access to all the prerequisites.

As to the situation with the online seller you mentioned, consider other possibilities:

- half of the votes were cast by bots coded to support the seller who paid for them;

- the community of buyers is very tight and the seller aligns well with the mindset of the majority, and is thus treated more favorably than they would be in a more diverse community;

- the algorithm calculating the rating is purposefully skewed so that it filters out the most negative votes, with the provider’s excuse being that extreme opinions harm the community.
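To make two of these scenarios concrete, the sketch below uses an invented sample of 80 genuine ratings on a 1-5 scale and shows how bot padding and a “drop the harshest votes” rule both raise the displayed average while the seller’s actual behaviour stays the same. The data, the function names and the filtering threshold are all hypothetical.

```python
from typing import List


def average(ratings: List[int]) -> float:
    return sum(ratings) / len(ratings)


def filtered_average(ratings: List[int], drop_at_or_below: int = 1) -> float:
    """Hypothetical 'moderation' rule: silently drop the harshest ratings before averaging."""
    kept = [r for r in ratings if r > drop_at_or_below]
    return sum(kept) / len(kept)


def with_bot_votes(ratings: List[int], bots: int, bot_score: int = 5) -> List[int]:
    """Pad the genuine ratings with paid-for bot ratings."""
    return ratings + [bot_score] * bots


# Invented sample: 80 genuine ratings on a 1-5 scale.
genuine = [5] * 60 + [3] * 10 + [1] * 10

if __name__ == "__main__":
    print(average(genuine))                           # 4.25
    print(round(filtered_average(genuine), 2))        # 4.71
    print(average(with_bot_votes(genuine, 80)))       # 4.625 (half the votes are bots)
```

A buyer who only sees the final number has no way to tell these three cases apart, which is the point about unverifiable inputs.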

I could invent more here, but I don’t want to write a book.

> Trust is about probability.

Yes, indirectly. It works when the provider of a rating system clearly benefits from clients/users receiving mostly reliable information. We discussed the eBay case above. As long as their well-being depends on clients being happy with their shopping, the system won’t be skewed. But if, for instance, eBay buys several suppliers, I bet those suppliers’ ratings might be adjusted to boost profits. Also, as I already pointed out, their cash-cow sellers (high-volume ones) might have their ratings adjusted as well.

So trust is about probability only in an ideal situation, where one can validate the inputs of other members, validate the algorithms and/or has a direct relationship with them. Otherwise, it’s easy to manipulate and far from reliable.

Going further to your second post, you are right about how trust is built between parties. But again, you refer to a situation where the parties can interact directly and build trust, a situation that gives far more hints than a possibly manipulated rating. And yet surprises can happen, even with a party trusted for years, because the external situation or some other factor forces it to become dishonest.

You are also right about trust as an asset. Yes, the penalty is high, which is a very good thing for anyone wanting to manipulate the choices of others. I cited research by ICMP in one of my posts. If you are an actor or pop-culture star and are smart enough to get 50% fake supporters (or smart enough to hire someone who understands how the net works), you are probably smart enough to create negative “community-driven” PR for your competitors.

> I think trust doesn’t have to be based on pure faith, it can be based on quantifiable data.

What quantifiable data? Any examples? Besides, then it becomes a proof…

> One more important bit: Trust cannot be transferred / given away like other currencies. But it can be staked. So it’s more like betting in a lottery.

Yes, and that’s one of the properties of trust: being like a lottery.

Next, you built your own version of the car example, with the stress laid on losing reputation. Your example would work, but only in a primary school textbook. In real life there’s a multitude of possible outcomes when you get a faulty car. Just to list a few:

- you excuse your friend, as the friendship is more valuable to you than the few bucks lost on repairs;

- you excuse her because she knows nothing about cars and probably acted in good faith;

- you show your disappointment and the relationship cools, but you don’t spread any info, as that was a personal situation;

- and hundreds of other shades.

When you get a good car, the situation can also have many shades. Suffice it to say that I assign different degrees of reliability to the people I know when it comes to different matters. Some know cars, some pretend to know but know little, some are total ignoramuses trying to hide their ignorance, and some openly state their lack of knowledge in that respect.

Extending a tool as complex and fuzzy as trust to a broader scope degrades its reliability quickly. It becomes useless.

Agent-centricity is a property of trust. The fact that Holochain is built around this concept doesn’t make ratings any more reliable, as they are external to the network. They can only be easier to implement.

Your debate with @Brooks is about not wanting to meet one another; the word comes from “battere”, to battle, and it is just a tribal instinct. I agree with both your viewpoints, because I happen to be focused on applications for both global ledgers and person-to-person ledgers. The innovation in Bitcoin was the Nakamoto consensus, which achieved social coordination and integrated with the human population (including the assets it controls, like computers). The idea that Bitcoin, Ethereum or BitLattice could be managed by bots is false; it is based on underestimating the human condition and overestimating “artificial intelligence”, as many people do. Lots of people believe they’ll be able to “upload their mind” into the cloud and live forever. Those memes spread via the limbic system, appeal to survival instincts and are a form of neurosis. There has been evidence since the 1950s that microtubules are computers.

This is what an axon looks like: packed with microtubules arranged along its length. That “cytoskeleton”, if microtubules are information systems, would provide mechanisms for transmitting, and also processing, information from the neuron to whatever the axon connects to. https://journals.plos.org/plosone/article/figures?id=10.1371/journal.pone.0131617